What are AI ‘world models’ and why do they matter?

Sources: https://techcrunch.com/2024/12/14/what-are-ai-world-models-and-why-do-they-matter

TL;DR

- World models (also called world simulators) are multimodal AI systems that build internal representations of how the world works to reason about consequences and plan actions.

- Proponents see them as a path toward more flexible, human-like AI capable of forecasting, planning, and interacting with physical and virtual environments, beyond surface-level pattern matching.

- Early demonstrations point to Sora (OpenAI) and a broader wave of investment in “large world models,” but the approach faces massive compute needs, data diversity requirements, and biases/hallucinations.

- For developers and enterprises, world models could improve video realism, robotics, and decision-making, but widespread adoption depends on data, tooling, and cost—experts caution that meaningful capabilities are still years away.

- The trajectory combines ambitious goals with substantial technical hurdles, including training at scale and aligning models with real-world physics and behavior.

Context and background

World models, also known as world simulators, aim to mirror a core human ability: forming internal representations of how the world behaves and using those representations to reason about future states and actions. The concept draws on longstanding ideas about mental models: our brains process sensory input and generate predictions that guide behavior without requiring explicit, conscious planning for every possible future. A well-cited illustration from researchers David Ha and Jürgen Schmidhuber compares a human batter’s reflexive swing to a model-based forecast of where a ball will land. They argue that top athletes rely on subconscious internal models to act quickly, not through exhaustive future planning. In AI, world models seek to capture something similar: an internal, actionable understanding of the world that supports rapid, goal-directed behavior. Interest in world models has grown in part because they promise capabilities that current generative systems struggle with. Much of today’s AI-generated video can look convincing or uncanny in the wrong ways, in part because the model reproduces surface patterns without understanding why objects move or interact the way they do. A world model with even a basic grasp of why a ball bounces could generate more plausible motion and interactions than a model that only learns correlations from pixels and text. Industry observers have pointed to the breadth of data used to train world models—photos, audio, videos, and text—in order to form richer internal representations of how the world works and how actions unfold. As one former Snap AI leader noted, a strong world model helps ensure that the observed world behaves in a way that aligns with our expectations when shown to a viewer. Those ambitions, if realized, could underpin more reliable simulations, planning, and decision-making across domains. In parallel, OpenAI has highlighted Sora as an example of a world model capable of simulating actions like painting brush strokes and even rendering game-like environments such as a Minecraft-style UI and world. Meta and other researchers have described the potential for world models to support forecasting and planning tasks in both digital and physical realms. Yann LeCun has framed a longer-term view where world models enable machines to remember, reason, and plan with intuition comparable to humans; he cautions that today’s AI systems are not yet at that level and that substantial progress remains.

What’s new

OpenAI’s Sora is cited as a concrete instance of a world-model approach, illustrating that such systems can go beyond static image or video generation to simulate actions and dynamics within a controlled environment. Sora’s demonstrated capabilities—such as painting-like actions on a canvas and rendering interactive game worlds—signal a shift from purely perceptual models to ones that reason about cause and effect in a structured way. OpenAI and others describe Sora—and related efforts—as world models, at least in spirit, even as practical products are in earlier stages. Industry commentary from Justin Johnson, a co-founder of World Labs, suggests that current and near-future world models will enable the creation of virtual, interactive worlds not just as images or clips, but as fully simulated environments. Johnson notes that the cost and development time of today’s virtual worlds are enormous, and world models could lower those barriers by providing on-demand, interactive simulations for gaming, virtual photography, and related applications. LeCun has described a vision in which world models could dramatically improve a system’s ability to reason and plan toward a desired goal. He acknowledged that the century-old aspiration of machines with human-level world understanding remains elusive and that we are likely a decade or more away from realizing his more expansive vision. Nonetheless, today’s world models are already being explored as elementary physics simulators and as engines for more robust reasoning about interactions. On the technical side, researchers note that Sora and similar models rely on large-scale computation. OpenAI and others emphasize that, while some language models can run on consumer devices, world models like Sora require substantial hardware—thousands of GPUs—for training and real-time inference if their use becomes commonplace. Training and running such models continues to be a computationally expensive proposition compared with many other AI workloads. Data limitations remain a central bottleneck. Even as a broad dataset is a prerequisite for a model to generalize across many scenarios, diversity must be coupled with specificity to ensure the model can deeply understand particular contexts. As Mashrabov, a tech executive with experience in AI, notes, models trained on limited demographics or environments may produce biased or inaccurate outputs. Runway’s CEO Cristóbal Valenzuela has echoed concerns that data and engineering hurdles currently prevent models from accurately capturing the behavior of real-world inhabitants, including humans and animals. If engineers overcome these hurdles, proponents believe world models could bridge AI and the real world more robustly—opening pathways not only in virtual world generation but also in robotics and AI decision-making. Some even anticipate that advanced world models could give robots a grounded, context-aware understanding of their environments, enabling more effective reasoning and problem solving.

What’s new (continued)

Despite the nascent state of the technology, executives and researchers view world models as a long-term bet with tangible early uses. They are already informing discussions about how AI could be deployed for forecasting, planning, and control tasks in both digital and physical spaces. The direction is to move from simply producing content to supporting agents that reason about the consequences of their actions and the structure of their environments.

Why it matters (impact for developers/enterprises)

If world models mature, they could reshape several practical domains:

- Forecasting and planning: A world model-based system could propose a sequence of actions to achieve a goal in a given environment, not merely replicate observed patterns. This has implications for automation, logistics, and decision-support systems.

- Robotics and embodied AI: The ability to form and use internal representations of the world could improve situational awareness for robots, enabling more robust navigation, manipulation, and interaction with humans and other agents.

- Video and interactive media: With deeper physical intuition, video synthesis and interactive simulations may become more coherent and realistic, reducing artifacts and improving user trust. Nonetheless, the path to enterprise-ready capabilities remains complex and costly. World models demand massive compute, broad yet precise training data, and sophisticated engineering to manage data biases and ensure the models’ representations align with real-world physics and social norms. Resources and tooling must mature before organizations can deploy these models at scale with predictable performance and governance. From an enterprise perspective, the headline takeaway is that world models promise a shift from static content generation toward systems that understand and reason about environments. The practical adoption curve will depend on data availability, cost-efficient compute, robust safety and bias controls, and developer tooling that makes building and integrating these models feasible at scale. A cautious but optimistic stance is common in industry discussions: the core ideas are compelling, but real-world impact will emerge gradually over years.

Technical details or Implementation

World models are described as multimodal systems that learn from photos, audio, video, and text to form internal representations of how the world works. These representations enable the model to reason about actions and their consequences, rather than merely predicting the next frame or caption.

- Data modalities: photos, audio, videos, and text are pooled to create a more grounded internal world representation. This multi-sensory data helps the model form cause-and-effect intuitions rather than surface-level correlations.

- Internal representations and reasoning: the goal is to move beyond pattern completion toward models that can simulate the outcomes of actions and plan a sequence of steps to achieve a goal, similar to how humans reason about changing states.

- Examples and demonstrations: Sora is highlighted as a world-model that can simulate painting strokes and render game-like environments. OpenAI views Sora as a world model capable of such dynamic simulation. The ability to render a Minecraft-like UI and game world demonstrates the potential for interactive, rule-based environments built on internal world representations.

- Practical challenges: world models require substantial compute, and even early attempts demand thousands of GPUs for training and inference if widespread use is intended. Training cost, inference latency, and scalability are central engineering challenges as these systems move from proof-of-concept to production. In addition, the field contends with hallucinations and biases inherited from training data, and a lack of data diversity can limit generalization to new environments or demographic groups.

- Data coverage and specificity: researchers emphasize that training data must be broad enough to cover diverse scenarios while also being specific enough to enable deep understanding of those scenarios. Without broad coverage and high-quality context, models may struggle to depict unfamiliar cities, climates, or human behaviors accurately.

- Hardware and economics: the industry notes the high cost of building and operating world-model systems. While consumer devices may host simpler models, world models as envisioned today would rely on large-scale infrastructure, at least in the near term. This has implications for budgeting, cloud usage, and the total cost of ownership.

Key considerations for implementation

- Interoperability with existing pipelines: integrating world-model components into current AI systems will require new interfaces for planning, simulation, and control, as well as mechanisms for monitoring and governance.

- Evaluation metrics: measuring the quality of world-model outputs involves more than perceptual realism; it requires assessing consistency with physical laws, causality, and the ability to plan successful action sequences.

- Data governance: to mitigate biases and ensure representativeness, organizations must curate datasets that cover a wide range of environments and populations, while respecting privacy and licensing.

- Safety and alignment: as with other AI systems, ensuring outputs align with human intent and safety norms will be a critical area of development.

Tables

| Aspect | Current status | Notes |---|---|---| | Compute requirements | Very high; training and running require substantial hardware | Sora and related models illustrate large-scale needs; thousands of GPUs may be required for practical deployment |Training data diversity | Biases and gaps are a risk | Data must cover varied scenarios and populations to reduce incorrect inferences |Data availability | Limited coverage can constrain performance | Broad, high-quality, and domain-specific data are needed |Capabilities today | Early demonstrations in simulation and video-like tasks | Moving from static generation toward dynamic reasoning and planning |Outlook | A decade-plus to reach broader human-like reasoning | Early physics-like simulators may appear sooner; broader capabilities evolve over time |

Key takeaways

- World models aim to endow AI with internal, causal representations of the world to reason about actions and outcomes, not just generate content.

- The approach is data- and compute-intensive, and practical deployment will hinge on scalable infrastructure and cost management.

- Early examples (e.g., Sora) show promise in simulating dynamics and interactive environments, but challenges around bias, hallucination, and generalization remain.

- The potential impact spans forecasting, robotics, and media generation, with the most concrete near-term gains likely in simulation fidelity and planning support rather than fully autonomous, human-like AI.

- Industry leaders caution that widespread, enterprise-grade world models are likely years away, underscoring a measured path from research to production.

FAQ

-

What is a world model?

A multimodal AI system that builds internal representations of how the world works to reason about consequences and to plan actions, trained on data such as photos, audio, videos, and text.

-

How do world models differ from traditional generative models?

World models aim to reason about dynamics and causality, enabling planning and action sequences, rather than solely generating perceptual outputs or next tokens.

-

What are the main challenges to commercialization?

Massive compute requirements, data diversity and coverage, biases and hallucinations, and the need for robust tooling and governance.

-

What is the near-term potential for enterprises?

Early benefits may come from improved simulation, forecasting, and planning in digital and physical environments, with broader adoption contingent on data, tooling, and cost reductions.

References

- TechCrunch: What are AI ‘world models’ and why do they matter? https://techcrunch.com/2024/12/14/what-are-ai-world-models-and-why-do-they-matter/

More news

Microsoft to turn Foxconn site into Fairwater AI data center, touted as world's most powerful

Microsoft unveils plans for a 1.2 million-square-foot Fairwater AI data center in Wisconsin, housing hundreds of thousands of Nvidia GB200 GPUs. The project promises unprecedented AI training power with a closed-loop cooling system and a cost of $3.3 billion.

Reddit Pushes for Bigger AI Deal with Google: Users and Content in Exchange

Reddit seeks a larger licensing deal with Google, aiming to drive more users and access to Reddit data for AI training, potentially via dynamic pricing and traffic incentives.

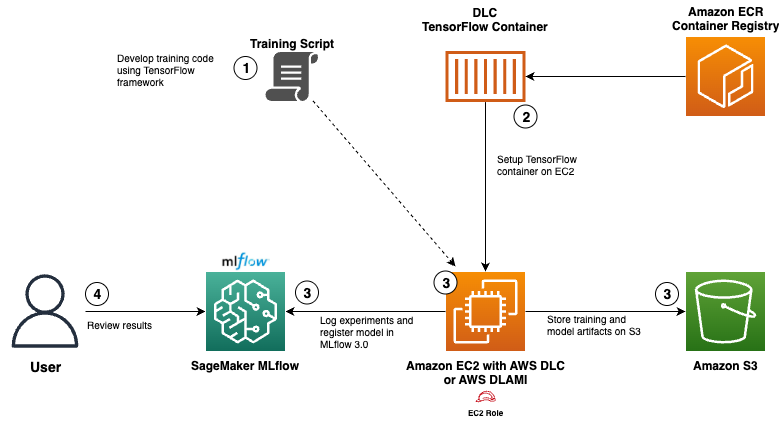

Use AWS Deep Learning Containers with Amazon SageMaker AI managed MLflow

Explore how AWS Deep Learning Containers (DLCs) integrate with SageMaker AI managed MLflow to balance infrastructure control and robust ML governance. A TensorFlow abalone age prediction workflow demonstrates end-to-end tracking, model governance, and deployment traceability.

GPT-5-Codex Addendum: Agentic Coding Optimized GPT-5 with Safety Measures

An addendum detailing GPT-5-Codex, a GPT-5 variant optimized for agentic coding within Codex, with safety mitigations and multi-platform availability.

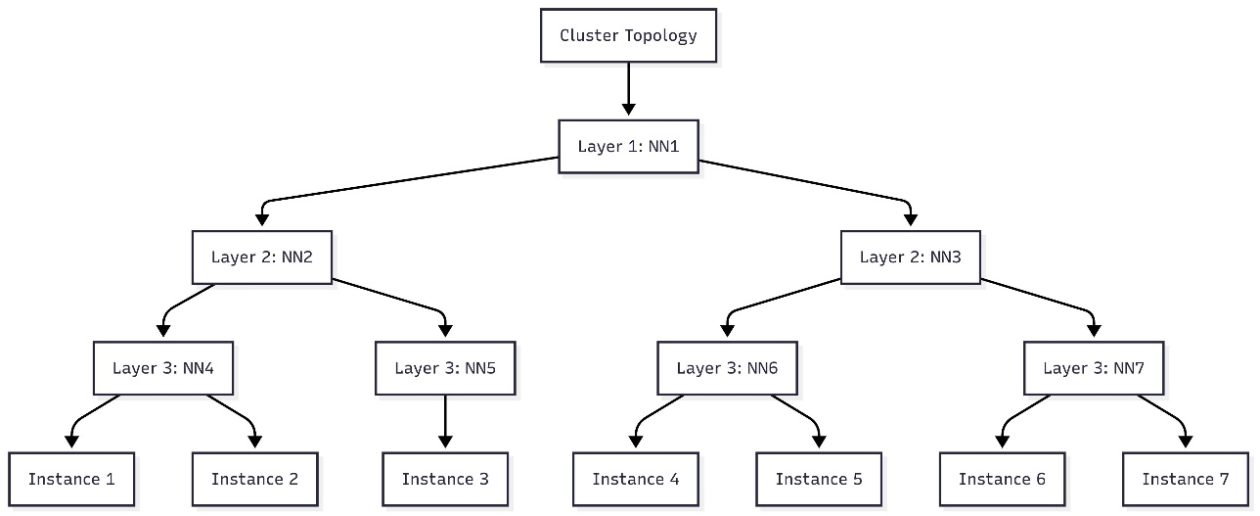

Schedule topology-aware workloads using Amazon SageMaker HyperPod task governance

AWS introduces topology-aware scheduling with SageMaker HyperPod task governance to optimize training efficiency and network latency on EKS clusters, using EC2 topology data to guide job placement.

How Quantization Aware Training Enables Low-Precision Accuracy Recovery

Explores quantization aware training (QAT) and distillation (QAD) as methods to recover accuracy in low-precision models, leveraging NVIDIA's TensorRT Model Optimizer and FP8/NVFP4/MXFP4 formats.