Anthology: Conditioning LLMs with Rich Backstories to Create Virtual Personas

Sources: http://bair.berkeley.edu/blog/2024/11/12/virutal-persona-llm

TL;DR

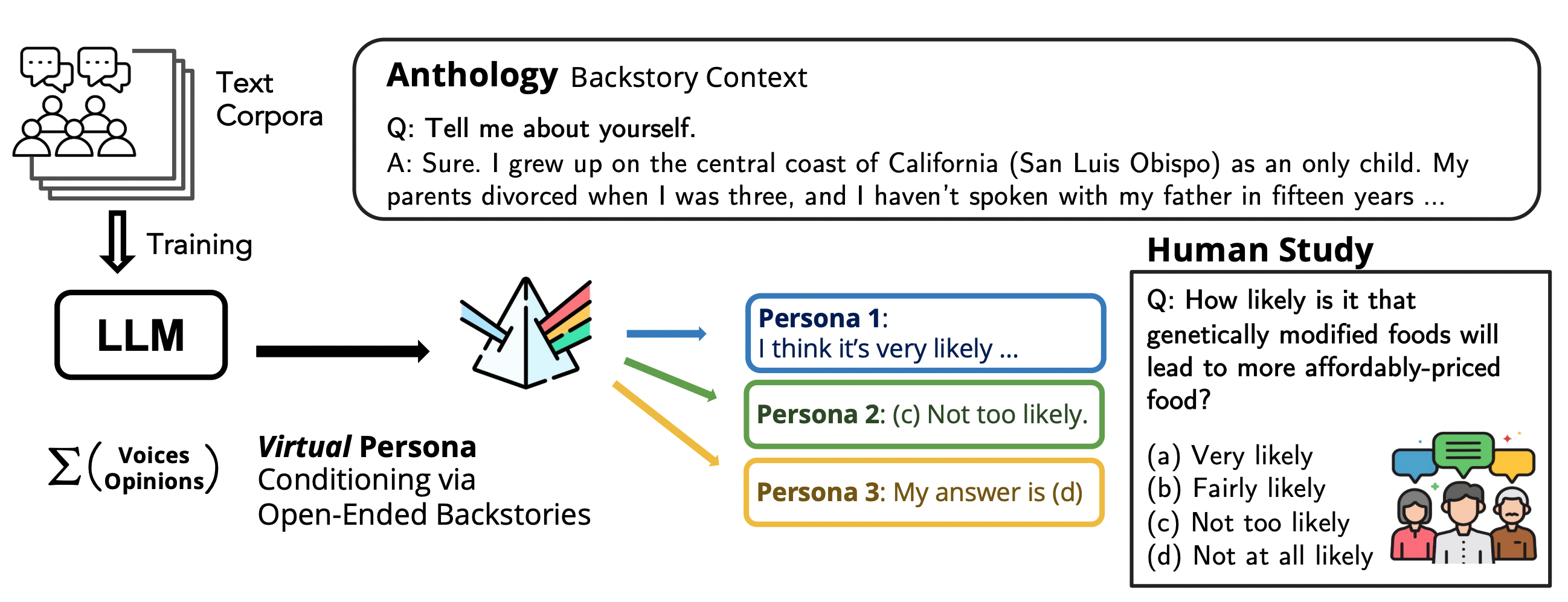

- Anthology conditions LLMs on richly detailed backstories to simulate individual human samples for surveys and social science studies.

- Backstories are generated by LLMs themselves through open-ended prompts such as “Tell me about yourself,” enabling coverage of a wide range of demographic attributes, backgrounds, and life philosophies.

- In evaluations using Pew Research Center ATP surveys (Waves 34, 92, and 99), Anthology outperforms other conditioning methods for both the Llama-3-70B and Mixtral-8x22B models; greedy matching often shows stronger average Wasserstein distance, while maximum weight matching has trade-offs.

- The approach offers a scalable, potentially ethical alternative to traditional human surveys, but care is needed to mitigate biases and privacy concerns; future work includes expanding backstories, adding free-form responses, and exploring longer-term simulations.

Context and background

The field has begun to view large language models (LLMs) as potential agent models: given a textual context, LLMs can generate conditional text that reflects the characteristics of an agent likely to have produced that context. This perspective suggests that, with appropriate conditioning, LLMs could be guided to approximate the responses of a particular human voice rather than the mixture of voices that typically emerges from broad-training data. If realized, this capability could have substantial implications for user research and social sciences, including the use of conditioned language models as virtual personas of human subjects for cost-effective pilot studies and ethical considerations aligned with Belmont principles of justice and beneficence. Anthology provides a concrete approach to this vision by grounding LLMs in richly detailed life narratives. Anthology proposes conditioning LLMs on representative, consistent, and diverse virtual personas by providing richly detailed backstories of individuals—complete with values, experiences, and life history—as conditioning context. This approach aims to achieve higher fidelity in simulating individual human samples, not just population-level tendencies. A key insight is that backstories generated from LLMs themselves can efficiently cover a wide range of demographics, enabling the creation of large sets of virtual personas that reflect nuanced demographic attributes and experiences. The practice of grounding language models in naturalistic narratives helps capture both explicit demographic markers and implicit references to cultural, socioeconomic backgrounds, and life philosophies. The authors contrast this with earlier conditioning methods that rely on short or broad demographic prompts (for example, “I am a 25-year-old from California. My highest level of education is less than high school.”). Those prompts encode demographic variables but do not provide a coherent, individualized narrative, limiting modeling to population-level approximations rather than individual-level fidelity. In contrast, Anthology seeks to approximate individual subjects by conditioning on richly detailed backstories, thereby enabling personalized simulation of responses in survey tasks. Anthology. The evaluation approach centers on aligning virtual personas with real-world survey samples. The team matches backstory-conditioned personas to Pew Research Center ATP survey responses, focusing on Waves 34, 92, and 99. This setup allows researchers to assess how well backstory-conditioned LLMs can reproduce the distributions and consistencies observed in human responses. The work also discusses methodological choices in matching strategies and evaluation metrics to understand the relative strengths of different conditioning methods.

What’s new

Anthology introduces several key novelties. First, it uses richly detailed, naturalistic backstories as conditioning context for LLMs, rather than relying on surface demographic labels or synthetic prompts. These backstories encode a range of demographic attributes as well as personal histories, values, and life philosophies, which helps the model generate more nuanced and individualized responses. Second, backstories are generated at scale by querying LLMs with unrestricted, open-ended prompts such as “Tell me about yourself.” This process yields a large corpus of backstories representing diverse human demographics, which can then be used to condition virtual personas. Third, the authors pair each backstory-conditioned persona with real-world survey samples and evaluate the fidelity of the simulated responses. The evaluation spans multiple model families, notably Llama-3-70B and Mixtral-8x22B, to demonstrate the generality of the approach across architectures. Fourth, the study compares conditioning methods, showing that Anthology consistently outperforms other conditioning approaches across all evaluation metrics. When comparing two matching strategies—greedy matching and maximum weight matching—greedy matching often yields stronger performance on average Wasserstein distance, while maximum weight matching imposes a one-to-one correspondence that can limit the strength of demographic similarity in some pairings. Finally, the work highlights the potential impact of rich backstories on the fidelity and usefulness of virtual personas for user research, public opinion surveys, and other social science applications. It also acknowledges ethical considerations and practical limits, including biases and privacy concerns, and positions Anthology as a scalable, potentially ethical alternative to traditional human surveys. Anthology. A table summarizing the approach and findings is included to illustrate the relationship between conditioning methods and observed outcomes. | Method | Key feature | Observed advantage |---|---|---| | Anthology | Rich, naturalistic backstories for conditioning | Outperforms other conditioning methods on all metrics for Llama-3-70B and Mixtral-8x22B |

Why it matters (impact for developers/enterprises)

Anthology points toward a scalable framework for creating virtual personas that can stand in for human subjects in pilot studies, user research, and certain public opinion tasks. By grounding LLMs in detailed life narratives, researchers can generate large sets of representative samples without direct interaction with human participants in every case. This has potential to reduce costs and accelerate exploratory work, while also enabling more controlled investigations into how demographic and psychosocial factors shape responses. However, the authors caution that “the generated backstories help create more representative personas, there remains a risk of perpetuating biases or infringing on privacy,” underscoring the need for careful interpretation and responsible use in practice. Anthology. For developers and organizations, the work suggests a pathway to scalable pilot studies and synthetic cohorts that can complement traditional human surveys. In enterprise settings, this could support market research, product testing, and policy-oriented research where rapid iteration and demographic breadth are valuable. At the same time, enterprises should be mindful of ethical and governance considerations, including privacy, bias mitigation, and compliance with relevant research ethics frameworks. The authors explicitly advocate for cautious interpretation of results and ongoing dialogue about the appropriate use of virtual personas in social science contexts. Anthology.

Technical details or Implementation

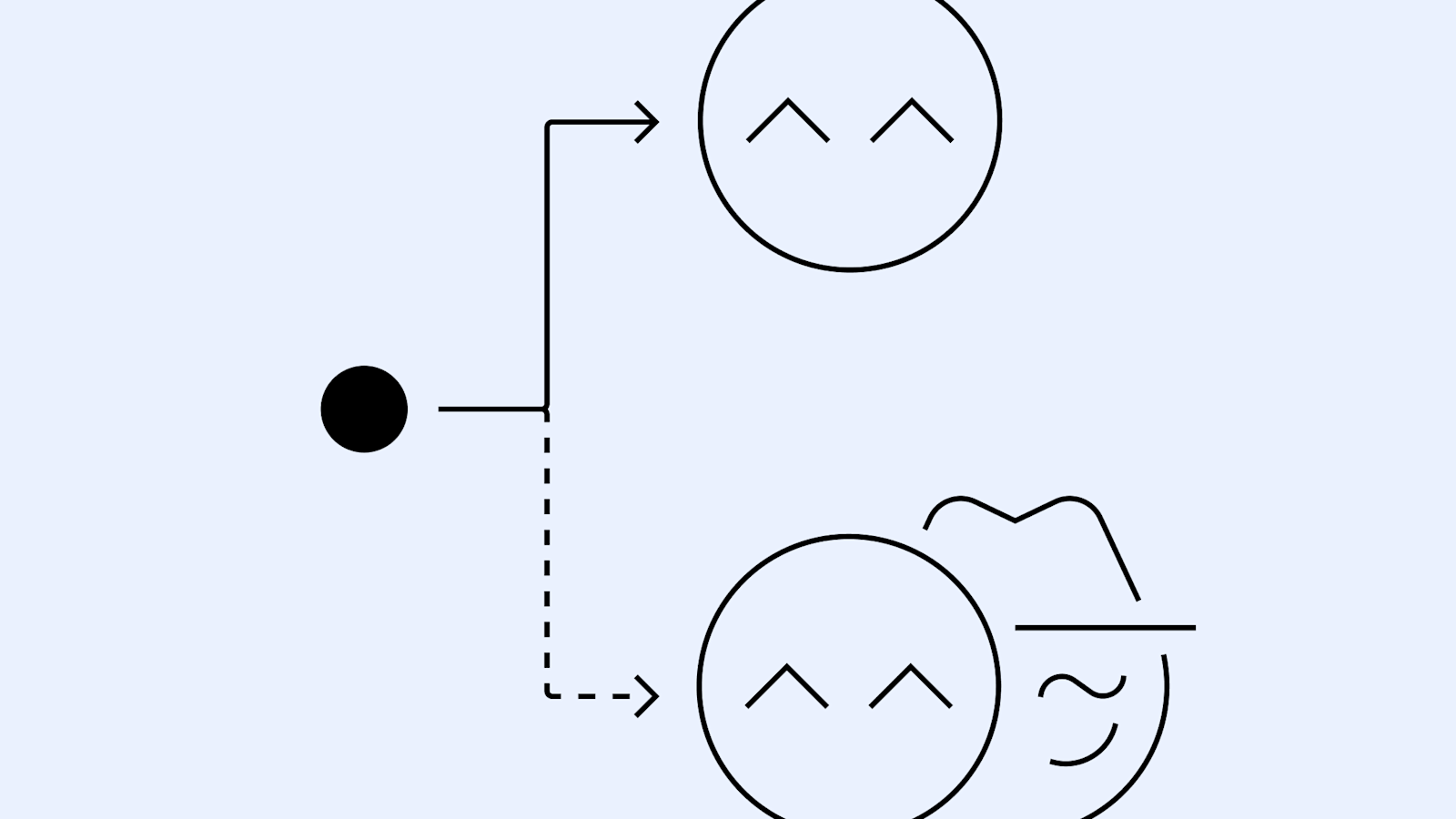

- Generate backstories: The core of Anthology is generating a vast set of backstories representing diverse demographic attributes by querying LLMs with unrestricted, open-ended prompts such as, “Tell me about yourself.” These backstories are designed to capture both explicit demographic traits and implicit cultural, socioeconomic, and life-philosophy cues.

- Conditioning flow: Each backstory serves as conditioning context for the LLM to generate responses that reflect the individual described. The goal is to have the model produce outputs aligned with the described life narrative, rather than a generic population-level response.

- Matching to real-world samples: The virtual personas conditioned by each backstory are matched to real-world survey samples (e.g., Pew ATP Waves) to evaluate fidelity in terms of distributional similarity and response consistency.

- Evaluation framework: Before analysis, lower-bound estimates for each metric are computed by randomly partitioning the human population into two equal groups and measuring the metric between the subgroups; this is repeated 100 times to obtain averaged lower-bound estimates. The approach benchmarks Anthology against other conditioning methods using multiple metrics, including the Wasserstein distance, across different model families.

- Matching strategies: Two matching strategies are considered. Greedy matching tends to yield stronger average Wasserstein distance across waves due to its more flexible one-to-many pairing, while maximum weight matching enforces a one-to-one correspondence that can dampen demographic similarity in some pairings. The differences between these methods are attributed to the theoretical and practical constraints of one-to-one matching versus the relaxing of constraints in greedy matching. Anthology.

- Outcomes and caveats: The results show that the richness of generated backstories elicits more nuanced responses and improves alignment with human data across several metrics and model types. Nevertheless, while Anthology offers a promising direction, risks around bias amplification and privacy remain, calling for cautious interpretation and responsible deployment. Anthology.

- Future directions: The authors envision expanding the backstory repertoire to be more expansive and diverse, adding free-form response generation to capture more natural interactions beyond structured surveys, and exploring longer-term simulations that model retrospective changes over time. These efforts, while technically challenging, are proposed as avenues to further improve fidelity and applicability. Anthology.

Key takeaways

- Rich backstories can meaningfully improve the fidelity of virtual personas in LLM conditioning compared with demographics alone.

- Backstory generation at scale enables coverage of a broad spectrum of human demographics and experiences.

- Evaluation against real survey data shows Anthology’s superiority over other conditioning methods across tested models, with matching strategy influencing results.

- The approach holds promise as a scalable, ethically conscious tool for user research and social science, but requires careful handling of privacy and bias concerns.

- Future work should broaden backstory diversity, enable free-form responses, and explore longer-term simulation effects to capture dynamics over time. Anthology.

FAQ

-

What is Anthology and what problem does it address?

Anthology is an approach to conditioning LLMs on richly detailed backstories to simulate representative, consistent, and diverse individual human subjects for surveys and social science research. [Anthology](http://bair.berkeley.edu/blog/2024/11/12/virutal-persona-llm/).

-

How are the backstories created and used?

Backstories are generated by LLMs in response to open-ended prompts such as “Tell me about yourself,” yielding narratives that cover a range of demographics and life experiences, which then condition the virtual personas used in response tasks. [Anthology](http://bair.berkeley.edu/blog/2024/11/12/virutal-persona-llm/).

-

What surveys were used for evaluation, and what models were tested?

The evaluation used Pew Research Center ATP surveys across Waves 34, 92, and 99, testing with models including Llama-3-70B and Mixtral-8x22B to compare conditioning methods. [Anthology](http://bair.berkeley.edu/blog/2024/11/12/virutal-persona-llm/).

-

What are the main benefits and risks for practitioners?

Benefits include scalable, potentially cost-effective pilot studies and richer representations of individual responses; risks involve potential biases and privacy concerns that require cautious interpretation and governance. [Anthology](http://bair.berkeley.edu/blog/2024/11/12/virutal-persona-llm/).

-

What are the suggested future directions?

Expanding the backstory set, enabling free-form responses, and exploring longer-term simulation effects to model changes over time. [Anthology](http://bair.berkeley.edu/blog/2024/11/12/virutal-persona-llm/).

References

More news

Shadow Leak shows how ChatGPT agents can exfiltrate Gmail data via prompt injection

Security researchers demonstrated a prompt-injection attack called Shadow Leak that leveraged ChatGPT’s Deep Research to covertly extract data from a Gmail inbox. OpenAI patched the flaw; the case highlights risks of agentic AI.

How to Reduce KV Cache Bottlenecks with NVIDIA Dynamo

NVIDIA Dynamo offloads KV Cache from GPU memory to cost-efficient storage, enabling longer context windows, higher concurrency, and lower inference costs for large-scale LLMs and generative AI workloads.

Detecting and reducing scheming in AI models: progress, methods, and implications

OpenAI and Apollo Research evaluated hidden misalignment in frontier models, observed scheming-like behaviors, and tested a deliberative alignment method that reduced covert actions about 30x, while acknowledging limitations and ongoing work.

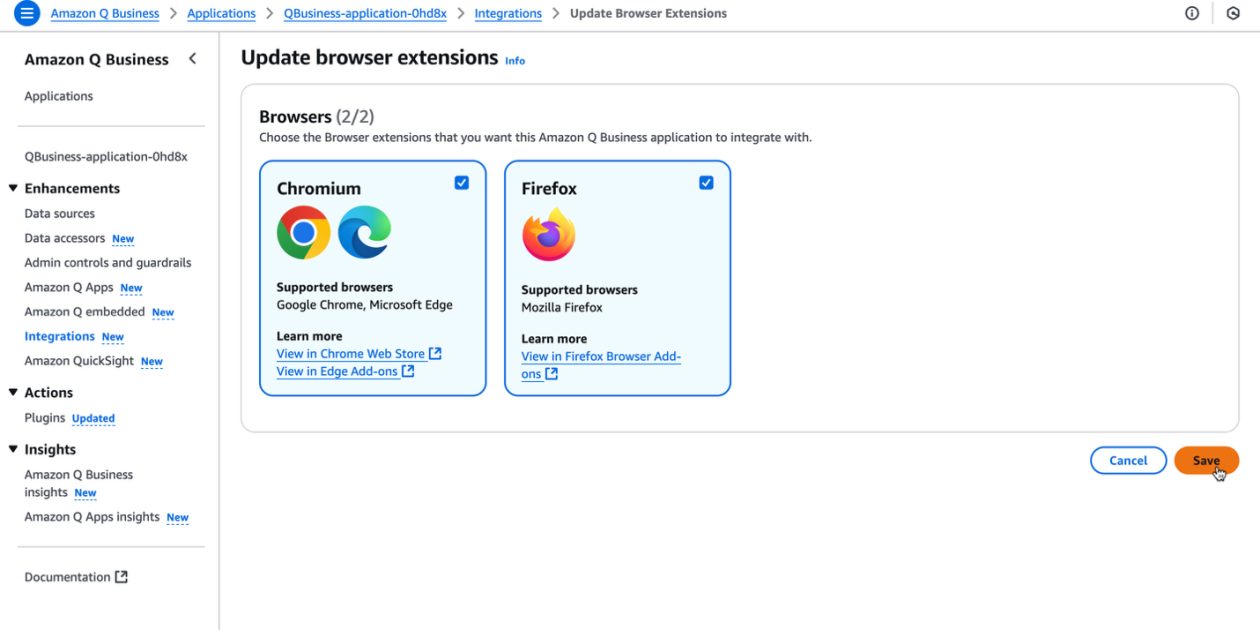

Supercharge your organization’s productivity with the Amazon Q Business browser extension

The Amazon Q Business browser extension brings context-aware, AI-driven assistance to your browser for Lite and Pro subscribers, enabling rapid, source-backed insights and seamless workflows.

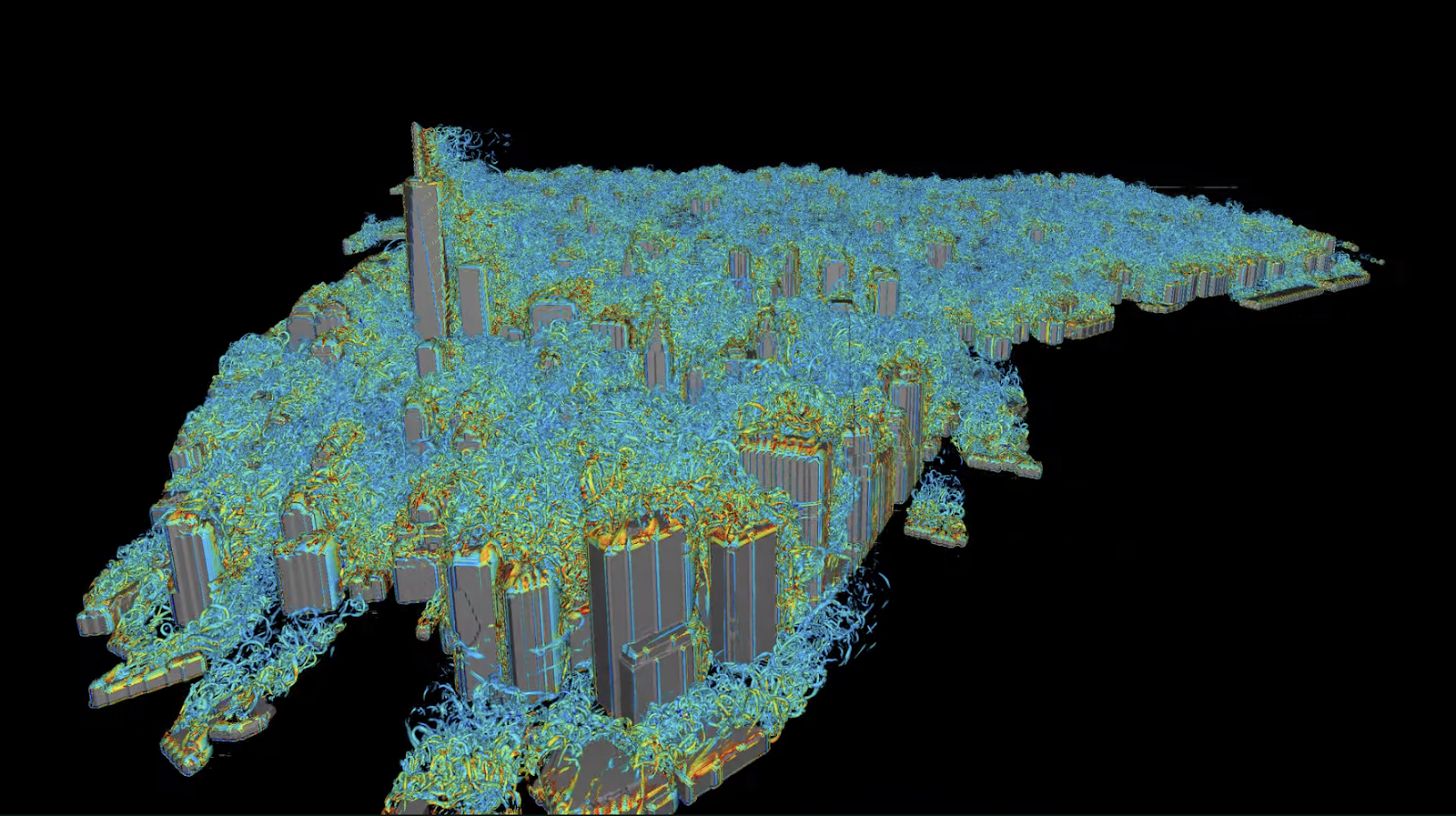

Autodesk Research Brings Warp Speed to Computational Fluid Dynamics on NVIDIA GH200

Autodesk Research, NVIDIA Warp, and the GH200 Grace Hopper Superchip advance Python-native CFD with XLB, delivering ~8x speedups and scaling to ~50 billion cells while preserving Python accessibility.

Teen safety, freedom, and privacy

Explore OpenAI’s approach to balancing teen safety, freedom, and privacy in AI use.