Positive Visions for AI Grounded in Human Wellbeing

Sources: https://thegradient.pub/we-need-positive-visions-for-ai-grounded-in-wellbeing

TL;DR

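- Ground AI development in wellbeing science so that "beneficial AI" is tied to people's lived experience. [source](https://thegradient.pub/we-need-positive-visions-for-ai-grounded-in-wellbeing/)

- There is no universal theory of wellbeing, but concrete factors like relationships, meaningful work, growth, and positive emotions matter for short- and long-term wellbeing. [source](https://thegradient.pub/we-need-positive-visions-for-ai-grounded-in-wellbeing/)

- We need plausible, positive visions of AI-enabled societies that recognize AI's potential to reshape institutions and daily life. [source](https://thegradient.pub/we-need-positive-visions-for-ai-grounded-in-wellbeing/)

- Foundation models will rapidly advance; steering their deployment to support wellbeing requires new algorithms, wellbeing-based evaluations, and targeted training data. [source](https://thegradient.pub/we-need-positive-visions-for-ai-grounded-in-wellbeing/)

- Concrete leverage points include wellbeing-centric product design, measurement, and governance, which translate these ideas into actionable software and policy. [source](https://thegradient.pub/we-need-positive-visions-for-ai-grounded-in-wellbeing/)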

Context and background

The essay argues for a pragmatic middle ground on the question of how to ensure AI benefits humanity. It proposes to demystify the term "beneficial AI" by grounding AI development in the science of wellbeing and the health of society. Although the authors come from AI backgrounds, they deliberately connect to fields that study flourishing, such as wellbeing economics, psychology, and philosophy, and they acknowledge that there is no single, universally accepted theory of wellbeing. The central claim is that human benefit is ultimately grounded in people's lived experience: people want to live happy, meaningful, healthy lives, and AI can be designed to support that aim. [source](https://thegradient.pub/we-need-positive-visions-for-ai-grounded-in-wellbeing/)

Key factors highlighted repeatedly across disciplines include supportive intimate relationships, meaningful and engaging work, a sense of growth and achievement, and positive emotional experiences. Beyond individual wellbeing, the authors emphasize that societal infrastructure (education, government, markets, and academia) is essential to sustaining wellbeing over years and decades. The piece argues that AI, as a transformative technology, can either contribute to a decline in societal wellbeing or support improvements, depending on how it is developed and deployed. [source](https://thegradient.pub/we-need-positive-visions-for-ai-grounded-in-wellbeing/)

There is a paradox: wellbeing is the deep purpose behind much human activity, yet measures of individual and societal wellbeing show signs of strain, including loss of trust, loneliness, and political divisiveness. The authors contend that AI's current trajectory may be complicit in these declines, which makes it more urgent to align AI with wellbeing objectives. On the bright side, the wellbeing lens reveals no fundamental obstacle to pairing wellbeing science with machine learning to produce collective benefits. [source](https://thegradient.pub/we-need-positive-visions-for-ai-grounded-in-wellbeing/)

The piece stresses the need for positive visions of AI-enabled futures: worlds in which AI revitalizes institutions, empowers meaningful work and relationships, and helps people pursue what matters to them. This requires imagination, groundedness, and technical plausibility, as well as careful navigation of critiques of technology. The authors argue that now is the time to dream and build toward those visions, taking seriously how foundation models and their deployment will shape everyday life. The example of GPT-2 and the rapid improvement that followed it is used to illustrate how quickly models can scale in capability and influence, underscoring the importance of steering development toward wellbeing outcomes. [source](https://thegradient.pub/we-need-positive-visions-for-ai-grounded-in-wellbeing/)

In short, the authors advocate grounding AI in wellbeing research and articulating concrete, measurable paths to beneficial AI, while acknowledging ongoing debates about the nature of wellbeing itself. They propose tangible leverage points to guide researchers and product teams in turning these ideas into practice. [source](https://thegradient.pub/we-need-positive-visions-for-ai-grounded-in-wellbeing/)

What’s new

A core takeaway is that it is acceptable not to have a single, definitive theory of wellbeing. The authors align with a practical view common in wellbeing economics: ground human benefit in the lived experience of people. This allows researchers to move forward with workable measures (for example, PERMA-like frameworks) and to design AI systems that support intimate relationships, engaging work, personal growth, and positive experiences. [source](https://thegradient.pub/we-need-positive-visions-for-ai-grounded-in-wellbeing/)

The piece also argues for plausible positive visions of AI-enabled societies and emphasizes that AI will inevitably shock societal infrastructure, perhaps more than social media did two decades ago. It calls for deliberate exploration and construction of AI-infused worlds that empower people and strengthen institutions rather than erode them. [source](https://thegradient.pub/we-need-positive-visions-for-ai-grounded-in-wellbeing/)

A third emphasis is the arc of foundation models. If future models become substantially more capable, their entanglement with daily life will increase, making it critical to enable these models to understand and support wellbeing through new algorithms, wellbeing-based evaluations of models, and wellbeing-focused training data. The authors outline concrete leverage points for achieving this in research and product development. [source](https://thegradient.pub/we-need-positive-visions-for-ai-grounded-in-wellbeing/)

Finally, the essay foregrounds practical examples of how wellbeing-aligned AI could function: low-cost but proficient AI coaches, intelligent journaling tools that aid reflection, and apps that help people find friends or partners or connect with loved ones. These examples anchor the discussion in tangible products while remaining grounded in the broader science of wellbeing. [source](https://thegradient.pub/we-need-positive-visions-for-ai-grounded-in-wellbeing/)

Why it matters (impact for developers/enterprises)

For developers and enterprises, this framework translates into a key shift: design and evaluate AI systems through the lens of wellbeing. Product roadmaps should incorporate wellbeing metrics and align incentives with real-life wellbeing outcomes rather than abstract success measures alone. Because there is no universal theory of wellbeing, teams can adopt workable measures and build tools that support lived experience, using frameworks like PERMA as flexible guides rather than rigid end states. [source](https://thegradient.pub/we-need-positive-visions-for-ai-grounded-in-wellbeing/)

The authors also stress the importance of societal infrastructure in sustaining wellbeing, suggesting that AI should be used to empower and strengthen institutions such as education, governance, and the economy. This implies governance and policy considerations for deployment, data collection, and evaluation that are actionable for product teams and organizational leaders. [source](https://thegradient.pub/we-need-positive-visions-for-ai-grounded-in-wellbeing/)

On the technical side, the article points to concrete leverage points: developing AI that can understand and support wellbeing, using wellbeing-based evaluations, and curating wellbeing-oriented training data. These steps are presented as practical, near-term moves that bring beneficial AI closer to reality, rather than as distant idealism. [source](https://thegradient.pub/we-need-positive-visions-for-ai-grounded-in-wellbeing/)

The discussion also includes a cautionary note about the risks of AI deployment that bypasses wellbeing considerations. The optimistic path is to pursue actionable, measurable wellbeing outcomes while recognizing the complexity and incompleteness of wellbeing theories. The outcome would be AI that complements human flourishing, supports relationships and purpose, and helps individuals cultivate healthier lifestyles and social connections. [source](https://thegradient.pub/we-need-positive-visions-for-ai-grounded-in-wellbeing/)

Technical details or Implementation

- Grounding foundation models in wellbeing: integrate signals related to wellbeing into training, evaluation, and data curation to align model behavior with human flourishing objectives. [source](https://thegradient.pub/we-need-positive-visions-for-ai-grounded-in-wellbeing/)

- Use workable wellbeing metrics: adopt frameworks like PERMA (Positive emotions, Engagement, Relationships, Meaning, Achievement) as guiding measures, while recognizing they do not map to a single universal theory. [source](https://thegradient.pub/we-need-positive-visions-for-ai-grounded-in-wellbeing/)

- Develop wellbeing-focused data and evaluation: curate training data and evaluation protocols that reflect human experiences and wellbeing outcomes, enabling models to better support users' wellbeing. [source](https://thegradient.pub/we-need-positive-visions-for-ai-grounded-in-wellbeing/)

- Concrete product directions: explore low-cost yet capable AI coaches, intelligent journaling tools for self-reflection, and apps that help users connect with friends, partners, or loved ones. These are cited as practical illustrations of wellbeing-centered AI products. [source](https://thegradient.pub/we-need-positive-visions-for-ai-grounded-in-wellbeing/)

- Cross-disciplinary collaboration: bridge wellbeing science with machine learning, ensuring that technical progress is matched by grounding in human flourishing research. [source](https://thegradient.pub/we-need-positive-visions-for-ai-grounded-in-wellbeing/)
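
As a rough illustration of what a "workable wellbeing metric" might look like in code, the sketch below averages self-report ratings into a PERMA-style profile and compares two profiles. It is not taken from the essay: the `SurveyResponse` type, the 0-10 rating scale, and the function names are all assumptions made for this example, and a real instrument would need validated survey items.

```python
from dataclasses import dataclass
from statistics import mean

# The five PERMA dimensions named in the text.
PERMA_DIMENSIONS = (
    "positive_emotions",
    "engagement",
    "relationships",
    "meaning",
    "achievement",
)


@dataclass(frozen=True)
class SurveyResponse:
    """One hypothetical self-report item tagged with a PERMA dimension."""

    dimension: str
    rating: float  # assumed scale: 0 (not at all) to 10 (completely)


def perma_profile(responses):
    """Average the ratings per dimension; dimensions with no items map to None."""
    by_dim = {dim: [] for dim in PERMA_DIMENSIONS}
    for r in responses:
        if r.dimension not in by_dim:
            raise ValueError(f"unknown PERMA dimension: {r.dimension!r}")
        if not 0 <= r.rating <= 10:
            raise ValueError(f"rating out of range: {r.rating}")
        by_dim[r.dimension].append(r.rating)
    return {dim: (mean(vals) if vals else None) for dim, vals in by_dim.items()}


def profile_change(before, after):
    """Per-dimension change between two profiles, skipping dimensions missing in either."""
    return {
        dim: after[dim] - before[dim]
        for dim in PERMA_DIMENSIONS
        if before[dim] is not None and after[dim] is not None
    }
```

A team might compute one profile before and one after a product change and inspect `profile_change` per dimension rather than collapsing everything into a single score, which fits the essay's point that PERMA is a flexible guide and not a single universal theory.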

Key takeaways

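- It is acceptable to proceed without a single, definitive theory of wellbeing; practical measures can guide beneficial AI. [source](https://thegradient.pub/we-need-positive-visions-for-ai-grounded-in-wellbeing/)

- The goal is plausible positive visions for AI that enhance wellbeing and support flourishing in daily life and institutions. [source](https://thegradient.pub/we-need-positive-visions-for-ai-grounded-in-wellbeing/)

- Foundation models will shape society; proactive design is essential to ensure these models understand and promote wellbeing. [source](https://thegradient.pub/we-need-positive-visions-for-ai-grounded-in-wellbeing/)

- Actionable leverage points include wellbeing-based evaluation, data curation, and product design that centers human flourishing. [source](https://thegradient.pub/we-need-positive-visions-for-ai-grounded-in-wellbeing/)

- The path to beneficial AI requires tying technical work to lived experience and measurable wellbeing outcomes. [source](https://thegradient.pub/we-need-positive-visions-for-ai-grounded-in-wellbeing/)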

FAQ

- What is wellbeing?

There is no universal agreement on a single definition, but common ideas include factors like supportive relationships, meaningful work, growth, and positive emotions, grounded in lived experience. [source](https://thegradient.pub/we-need-positive-visions-for-ai-grounded-in-wellbeing/)

- How can AI support wellbeing in practice?

By enabling tools such as low-cost AI coaches, intelligent journals, and apps that help people connect with others, all designed to reflect practical wellbeing measures. [source](https://thegradient.pub/we-need-positive-visions-for-ai-grounded-in-wellbeing/)

- How should organizations measure wellbeing-related AI impact?

Use workable wellbeing metrics (e.g., PERMA) as guiding measures and develop wellbeing-based evaluations of models to align outcomes with lived experience. [source](https://thegradient.pub/we-need-positive-visions-for-ai-grounded-in-wellbeing/)
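
One way to picture a wellbeing-based evaluation of a model is a small harness that runs prompts through the model, has each response rated on wellbeing dimensions, and averages the ratings. The sketch below is an assumption-laden illustration and is not the essay's method: `evaluate_wellbeing`, `toy_model`, and `toy_rater` are hypothetical names, and the keyword-based rater is a placeholder for trained human raters or a validated instrument.

```python
from statistics import mean
from typing import Callable, Dict, List

# Hypothetical interfaces: a model maps a prompt to a response, and a rater
# maps (prompt, response) to a score per wellbeing dimension.
Model = Callable[[str], str]
Rater = Callable[[str, str], Dict[str, float]]


def evaluate_wellbeing(model: Model, rater: Rater, prompts: List[str]) -> Dict[str, float]:
    """Run the model on each prompt, rate each response, and average scores per dimension."""
    if not prompts:
        raise ValueError("need at least one prompt")
    scores: Dict[str, List[float]] = {}
    for prompt in prompts:
        response = model(prompt)
        for dimension, score in rater(prompt, response).items():
            scores.setdefault(dimension, []).append(score)
    return {dimension: mean(values) for dimension, values in scores.items()}


# Stand-ins for illustration only: a real setup would call an actual model
# and collect ratings from people or a validated measurement instrument.
def toy_model(prompt: str) -> str:
    return "Have you considered calling a friend to talk it through?"


def toy_rater(prompt: str, response: str) -> Dict[str, float]:
    # Assumed toy rule: responses that point toward other people score
    # higher on "relationships"; "meaning" gets a fixed placeholder score.
    return {"relationships": 1.0 if "friend" in response else 0.0, "meaning": 0.5}


if __name__ == "__main__":
    print(evaluate_wellbeing(toy_model, toy_rater, ["I feel lonely.", "I had a rough day."]))
```

Keeping the model and the rater as separate callables means the same harness could compare several model variants against one fixed rating procedure.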

- What are the risks and how can we address them?

The article cautions that AI could contribute to societal decline if wellbeing considerations are ignored; addressing this requires grounding AI in wellbeing science and pursuing concrete, implementable leverage points. [source](https://thegradient.pub/we-need-positive-visions-for-ai-grounded-in-wellbeing/)

- What role do foundation models play in this approach?

Foundation models and their deployment are central; as models become more capable, it is crucial to ensure they understand and support wellbeing through new algorithms, wellbeing-based evaluations, and wellbeing-focused training data. [source](https://thegradient.pub/we-need-positive-visions-for-ai-grounded-in-wellbeing/)

References

More news

Microsoft to turn Foxconn site into Fairwater AI data center, touted as world's most powerful

Microsoft unveils plans for a 1.2 million-square-foot Fairwater AI data center in Wisconsin, housing hundreds of thousands of Nvidia GB200 GPUs. The project promises unprecedented AI training power with a closed-loop cooling system and a cost of $3.3 billion.

Reddit Pushes for Bigger AI Deal with Google: Users and Content in Exchange

Reddit seeks a larger licensing deal with Google, aiming to drive more users and access to Reddit data for AI training, potentially via dynamic pricing and traffic incentives.

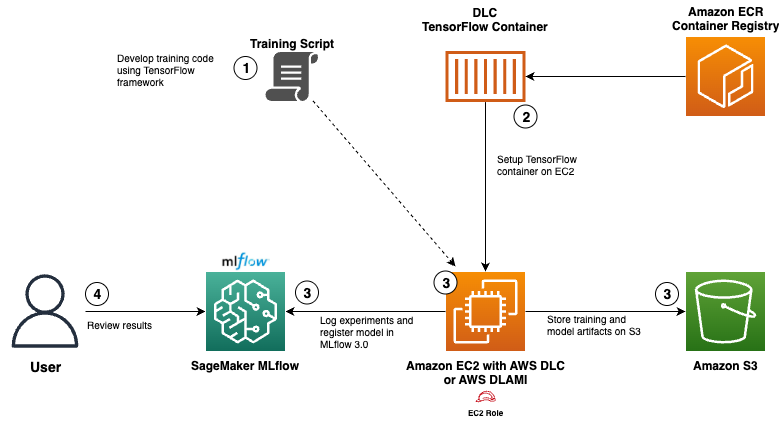

Use AWS Deep Learning Containers with Amazon SageMaker AI managed MLflow

Explore how AWS Deep Learning Containers (DLCs) integrate with SageMaker AI managed MLflow to balance infrastructure control and robust ML governance. A TensorFlow abalone age prediction workflow demonstrates end-to-end tracking, model governance, and deployment traceability.

GPT-5-Codex Addendum: Agentic Coding Optimized GPT-5 with Safety Measures

An addendum detailing GPT-5-Codex, a GPT-5 variant optimized for agentic coding within Codex, with safety mitigations and multi-platform availability.

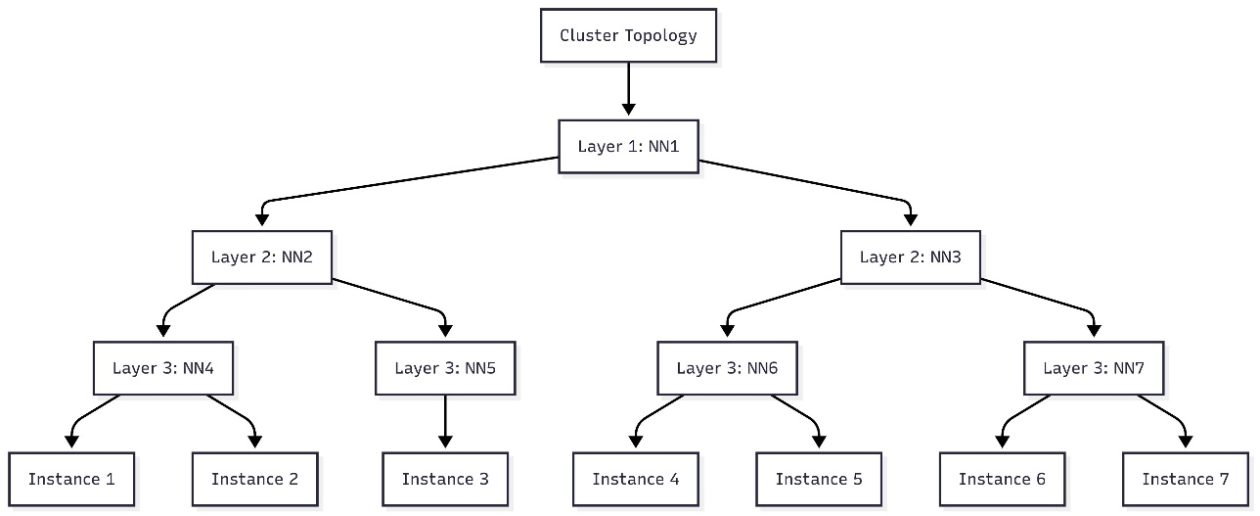

Schedule topology-aware workloads using Amazon SageMaker HyperPod task governance

AWS introduces topology-aware scheduling with SageMaker HyperPod task governance to optimize training efficiency and network latency on EKS clusters, using EC2 topology data to guide job placement.

How Quantization Aware Training Enables Low-Precision Accuracy Recovery

Explores quantization aware training (QAT) and distillation (QAD) as methods to recover accuracy in low-precision models, leveraging NVIDIA's TensorRT Model Optimizer and FP8/NVFP4/MXFP4 formats.