Mamba Explained: A State-Space Model Alternative to Transformers for Million‑Token Contexts

TL;DR

- Mamba replaces Transformer attention with a State Space Model (SSM) for inter-token communication. The Gradient

- It achieves linear scaling in sequence length, enabling long contexts up to around 1 million tokens. The Gradient

- Inference can be up to 5x faster than Transformer-based equivalents, with competitive language performance. The Gradient

- The Mamba-3B model reportedly outperforms Transformers of the same size and matches Transformers twice its size in pretraining and downstream evaluation. The Gradient

- The approach uses a discrete-time SSM with Zero-Order Hold (ZOH) discretisation and Mamba blocks built from A, B, C, D matrices, while preserving traditional MLP-like computation and local convolutions. The Gradient

Context and background

Transformers have been the dominant paradigm in recent AI breakthroughs, largely thanks to attention mechanisms that let tokens look back over long histories. A core limitation of this approach is the quadratic bottleneck in attention: during training, the forward pass scales roughly as O(n^2) with context length n, and autoregressive generation scales at O(n) per token. This makes very long contexts expensive in time and memory, leading to concerns about practical limits on context window size. In the article describing Mamba, the authors argue that to push beyond these limits, one must substitute the attention-based communication channel with an alternative mechanism, while retaining the efficient, non-attention-based computation path (MLPs and similar projections). The Gradient

Mamba is positioned within the broader class of models known as State Space Models (SSMs). Rather than using dot-product attention to mediate token-to-token communication, Mamba employs an SSM as the communication backbone and keeps MLP-style projections for computation. This design aims to deliver fast inference, linear scaling in sequence length, and competitive performance across modalities such as language, audio, and genomics. The authors report that Mamba's language-modeling results, specifically those of a 3B-parameter variant, outperform Transformers of the same size and match Transformers roughly twice its size in both pretraining and downstream evaluation. The Gradient

What makes this approach noteworthy is the framing of time and state: the hidden state h carries the world's relevant information, and new observations x update the state through a state-space dynamic. This is formalized with continuous-time dynamics that are discretised for practical, discrete-time computation. The article emphasizes that, in theory, once the state is compact and informative, the next action can be predicted from that state alone, avoiding the need to retain all past tokens. The Gradient
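To make the quadratic-versus-linear contrast in this section concrete, the short sketch below counts units of work rather than measuring real runtimes. It assumes causal attention scores every (query, earlier-or-equal key) pair once, and that a recurrent or SSM scan performs one fixed-size state update per token. The function names are hypothetical and chosen only for illustration.

```python
def attention_pair_count(n: int) -> int:
    """Token pairs scored by causal self-attention over n tokens (assumed cost model)."""
    return n * (n + 1) // 2


def state_update_count(n: int) -> int:
    """Fixed-size state updates made by a recurrent/SSM scan over n tokens."""
    return n


for n in (1_000, 100_000, 1_000_000):
    pairs = attention_pair_count(n)
    updates = state_update_count(n)
    print(f"n={n:>9,}  attention pairs={pairs:,}  "
          f"state updates={updates:,}  ratio={pairs / updates:,.1f}")
```

Under this simplified cost model the ratio grows in proportion to n, which is why the gap matters most at the long context lengths discussed here. Actual speedups depend on hardware, kernels, and model dimensions.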

What’s new

- Mamba replaces the traditional attention mechanism with a Control Theory–inspired State Space Model (SSM) to mediate inter-token communication within a stacked Mamba-block architecture. This substitution aims to retain the benefits of attention (effective communication) while removing its quadratic bottleneck. The Gradient

- To enable discrete-time operation, Mamba uses a discretisation strategy known as Zero-Order Hold (ZOH), transforming continuous-time state dynamics into a practical discrete-time update. This yields a forward path that mirrors RNN-like recurrence while leveraging an SSM for communication. The Gradient

- The approach demonstrates linear scaling in sequence length, with performance claims extending to contexts as long as 1 million tokens. The authors report that Mamba runs faster than Transformer-based models by up to roughly 5x in suitable configurations. The Gradient

- The Mamba-3B model is cited as outperforming Transformers of the same size and matching Transformers twice its size on language modeling benchmarks, both during pretraining and downstream evaluation. The Gradient

- The Mamba framework retains traditional computational components (MLP-style projections, nonlinearities, and local convolutions) while reorganising how communication happens across tokens. This positions Mamba as a potential backbone for diverse modalities beyond language. The Gradient
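The split between communication (the SSM) and computation (projections, nonlinearities, local convolutions) described in the list above can be sketched in NumPy as follows. This is an illustrative layout, not the authors' implementation: the ordering of the steps, the SiLU nonlinearity, the gating branch, the residual connection, and all names and sizes (`mamba_like_block`, `W_in`, `d_inner`, and so on) are assumptions, and the SSM parameters are fixed and already discretised for simplicity.

```python
import numpy as np

rng = np.random.default_rng(0)


def silu(z):
    """SiLU nonlinearity: z * sigmoid(z)."""
    return z / (1.0 + np.exp(-z))


def causal_depthwise_conv(x, kernel):
    """Local convolution. x: (L, d), kernel: (k, d). Token t sees tokens t-k+1..t only."""
    k = kernel.shape[0]
    padded = np.vstack([np.zeros((k - 1, x.shape[1])), x])
    return np.stack([(padded[t:t + k] * kernel).sum(axis=0)
                     for t in range(x.shape[0])])


def ssm_communicate(x, A_bar, B_bar, C):
    """Per-channel diagonal SSM scan. x: (L, d); A_bar, B_bar, C: (d, n)."""
    h = np.zeros_like(A_bar)
    out = np.empty_like(x)
    for t in range(x.shape[0]):
        h = A_bar * h + B_bar * x[t][:, None]   # state update (communication)
        out[t] = (C * h).sum(axis=1)            # read the state back out
    return out


def mamba_like_block(x, p):
    """Assumed layout: project in, local conv, SSM scan, gate, project out, residual."""
    u = x @ p["W_in"]                            # computation: linear projection
    gate = x @ p["W_gate"]                       # computation: gating branch
    u = silu(causal_depthwise_conv(u, p["conv"]))
    y = ssm_communicate(u, p["A_bar"], p["B_bar"], p["C"])
    return x + (y * silu(gate)) @ p["W_out"]


# Hypothetical sizes, chosen only to keep the example small.
L, d_model, d_inner, n_state, k = 10, 8, 16, 4, 3
params = {
    "W_in": rng.normal(size=(d_model, d_inner)) * 0.1,
    "W_gate": rng.normal(size=(d_model, d_inner)) * 0.1,
    "W_out": rng.normal(size=(d_inner, d_model)) * 0.1,
    "conv": rng.normal(size=(k, d_inner)) * 0.1,
    "A_bar": np.exp(-rng.uniform(0.1, 1.0, size=(d_inner, n_state))),  # entries in (0, 1)
    "B_bar": rng.normal(size=(d_inner, n_state)) * 0.1,
    "C": rng.normal(size=(d_inner, n_state)),
}
x = rng.normal(size=(L, d_model))
out = mamba_like_block(x, params)
print(out.shape)
```

Only `ssm_communicate` and the short convolution mix information across token positions; every other step acts on each token independently, which mirrors the communication/computation split described above.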

Why it matters (impact for developers/enterprises)

If Mamba’s claims hold broadly, long-context language models could become more practical for real-world applications: chat systems that remember long histories, document-level reasoning, and multi-modal pipelines that require extended context windows. Linear scaling in sequence length reduces memory pressure and can shorten inference times, potentially lowering operational costs and enabling deployment on hardware with tighter memory constraints. The prospect of achieving competitive performance at very long context lengths also broadens the design space for building agents, assistants, and AI services that rely on long-range dependencies. While still early, the Mamba approach represents a concrete alternative path in the ongoing exploration of scalable sequence models. The Gradient

Technical details or Implementation

- The core substitution is: instead of the Transformer’s Attention (communication) module, Mamba uses a State Space Model (SSM) for communication, while keeping MLP-style projection-based computation. This mirrors the broad architecture of stacked blocks, with the SSM occupying the communication role. The Gradient

- The SSM formulation centers on a hidden state h, its evolution h’(t) = A h(t) + B x(t), and an output equation y(t) = C h(t) + D x(t). The state captures the essential information needed to predict the next token, and the observation x updates the state via a linear transformation. The Gradient

- To apply these dynamics in discrete time, Mamba uses a step size Δ with the Zero-Order Hold (ZOH) method. ZOH gives the discrete parameters Ā = exp(ΔA) and B̄ = (ΔA)^{-1} (exp(ΔA) − I) ΔB; to first order in Δ, the hidden-state update becomes h_{t+1} ≈ (I + Δ A) h_t + (Δ B) x_t, enabling stepwise progression through tokens akin to RNN-like recurrence but with a state-space communication backbone. The Gradient

- The A, B, C, D matrices define how the hidden state evolves and how observations are translated into outputs, making the SSM a direct, mechanistic substitute for attention in the Mamba block. The remaining backbone computation (nonlinearities, MLP projections, and local convolutions) remains in the computational path. The Gradient

- The discussion contrasts the efficiency of attention with the compactness of recurrent-like state representations. Transformers provide broad recall across long contexts but are hampered by quadratic memory/time costs; Mamba seeks a Pareto-frontier approach that is closer to the efficiency of RNNs while offering strong performance. The Gradient
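The discretisation and recurrence described in the list above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: A is taken to be diagonal with negative, nonzero entries (stored as a 1-D array), the input is a scalar sequence, and every value and function name (`discretize_zoh`, `discretize_first_order`, `ssm_scan`) is hypothetical. The scan indexes the update as h_t = Ā h_{t-1} + B̄ x_t, which is the same recurrence as above with the time index shifted by one.

```python
import numpy as np


def discretize_zoh(A, B, delta):
    """Zero-Order Hold for diagonal A: A_bar = exp(dA), B_bar = (dA)^-1 (exp(dA) - 1) * dB."""
    A_bar = np.exp(delta * A)
    B_bar = (A_bar - 1.0) / A * B   # the delta factors cancel for diagonal A
    return A_bar, B_bar


def discretize_first_order(A, B, delta):
    """First-order approximation: A_bar ~ 1 + delta*A, B_bar ~ delta*B."""
    return 1.0 + delta * A, delta * B


def ssm_scan(A_bar, B_bar, C, D, x):
    """Run h_t = A_bar*h_{t-1} + B_bar*x_t and y_t = C.h_t + D*x_t over a 1-D input x."""
    h = np.zeros_like(A_bar)
    ys = []
    for x_t in x:
        h = A_bar * h + B_bar * x_t   # state update: fixed cost per token
        ys.append(C @ h + D * x_t)    # output equation
    return np.array(ys)


# Hypothetical parameters, chosen only for illustration.
A = -np.array([1.0, 2.0, 3.0, 4.0])     # diagonal entries of A
B = np.ones(4)
C = np.array([0.5, -0.25, 0.1, 0.3])
D = 1.0
delta = 0.01
x = np.sin(np.linspace(0.0, 2.0 * np.pi, 200))

y_zoh = ssm_scan(*discretize_zoh(A, B, delta), C, D, x)
y_fo = ssm_scan(*discretize_first_order(A, B, delta), C, D, x)
print("max |ZOH - first order| =", np.max(np.abs(y_zoh - y_fo)))
```

For a small step size the two discretisations give nearly identical outputs, which is why the first-order form is a convenient way to read the update. The state `h` keeps the same size however long `x` is, so the per-token cost does not grow with context length.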

Key takeaways

- Mamba introduces a State Space Model as the communication backbone to replace attention in Transformers. The Gradient

- It targets linear scaling in context length, enabling practical long-context usage up to about 1 million tokens. The Gradient

- Discretisation via Zero-Order Hold is central to translating continuous-time SSM dynamics into discrete-time updates used for token-by-token processing. The Gradient

- Mamba-3B is reported to outperform Transformers of the same size and match larger Transformers in language modeling benchmarks. The Gradient

- The approach preserves MLP-based computation paths while replacing the communication mechanism, offering a novel design space for scalable sequence modeling. The Gradient

FAQ

- What is Mamba in simple terms?

  Mamba is a neural network backbone that uses a State Space Model to communicate between tokens, instead of the Transformer's attention mechanism. [The Gradient](https://thegradient.pub/mamba-explained/)

- How does Mamba handle extremely long contexts?

  By using a linear-scaling SSM communication backbone and discretisation (Zero-Order Hold), Mamba aims to support long contexts up to around 1 million tokens. [The Gradient](https://thegradient.pub/mamba-explained/)

- What about performance and speed?

  The authors claim fast inference with up to about 5x speedups over Transformer-based equivalents in suitable settings, along with competitive language modeling performance. [The Gradient](https://thegradient.pub/mamba-explained/)

- How does the implementation work at a high level?

  The hidden state follows h’(t) = A h(t) + B x(t), and outputs are y(t) = C h(t) + D x(t). Discretising with a ZOH scheme and step size Δ yields, to first order in Δ, h_{t+1} ≈ (I + Δ A) h_t + (Δ B) x_t. [The Gradient](https://thegradient.pub/mamba-explained/)

- Are there caveats or limits mentioned?

  The source emphasizes the potential and theoretical appeal of the SSM approach and reports promising results, but it presents these claims within the context of ongoing research and evaluation. [The Gradient](https://thegradient.pub/mamba-explained/)

References

- The Gradient: https://thegradient.pub/mamba-explained/

More news

NVIDIA HGX B200 Reduces Embodied Carbon Emissions Intensity

NVIDIA HGX B200 lowers embodied carbon intensity by 24% vs. HGX H100, while delivering higher AI performance and energy efficiency. This article reviews the PCF-backed improvements, new hardware features, and implications for developers and enterprises.

Scaleway Joins Hugging Face Inference Providers for Serverless, Low-Latency Inference

Scaleway is now a supported Inference Provider on the Hugging Face Hub, enabling serverless inference directly on model pages with JS and Python SDKs. Access popular open-weight models and enjoy scalable, low-latency AI workflows.

How to Reduce KV Cache Bottlenecks with NVIDIA Dynamo

NVIDIA Dynamo offloads KV Cache from GPU memory to cost-efficient storage, enabling longer context windows, higher concurrency, and lower inference costs for large-scale LLMs and generative AI workloads.

Monitor Amazon Bedrock batch inference using Amazon CloudWatch metrics

Learn how to monitor and optimize Amazon Bedrock batch inference jobs with CloudWatch metrics, alarms, and dashboards to improve performance, cost efficiency, and operational oversight.

Speculative Decoding to Reduce Latency in AI Inference: EAGLE-3, MTP, and Draft-Target Approaches

A detailed look at speculative decoding for AI inference, including draft-target and EAGLE-3 methods, how they reduce latency, and how to deploy on NVIDIA GPUs with TensorRT.

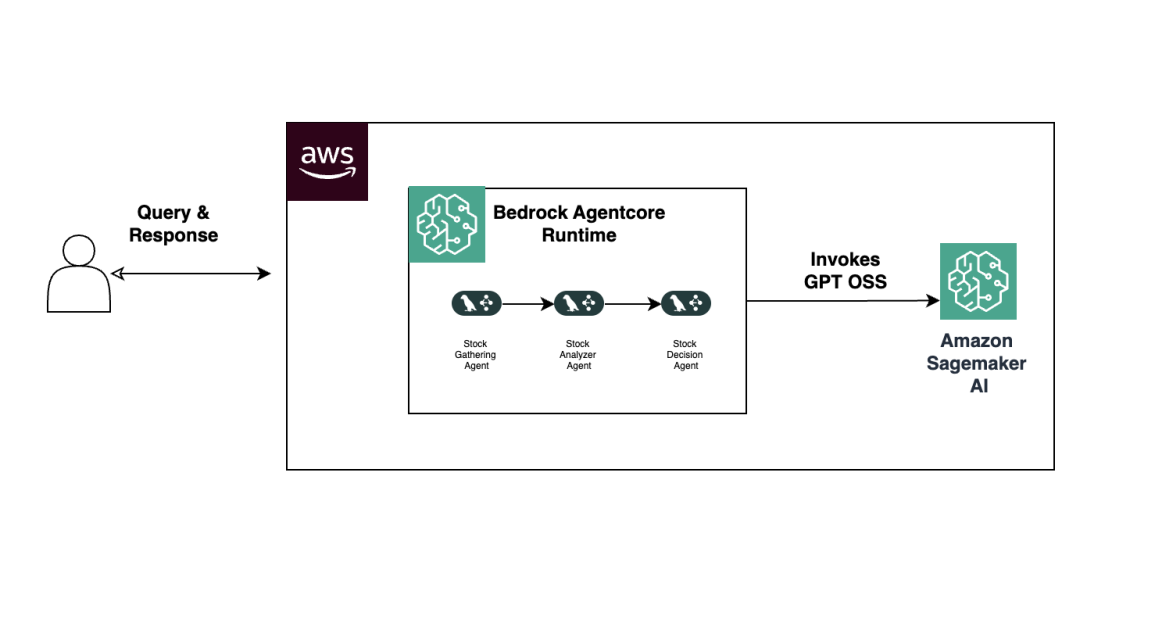

Build Agentic Workflows with OpenAI GPT OSS on SageMaker AI and Bedrock AgentCore

An end-to-end look at deploying OpenAI GPT OSS models on SageMaker AI and Bedrock AgentCore to power a multi-agent stock analyzer with LangGraph, including 4-bit MXFP4 quantization, serverless orchestration, and scalable inference.