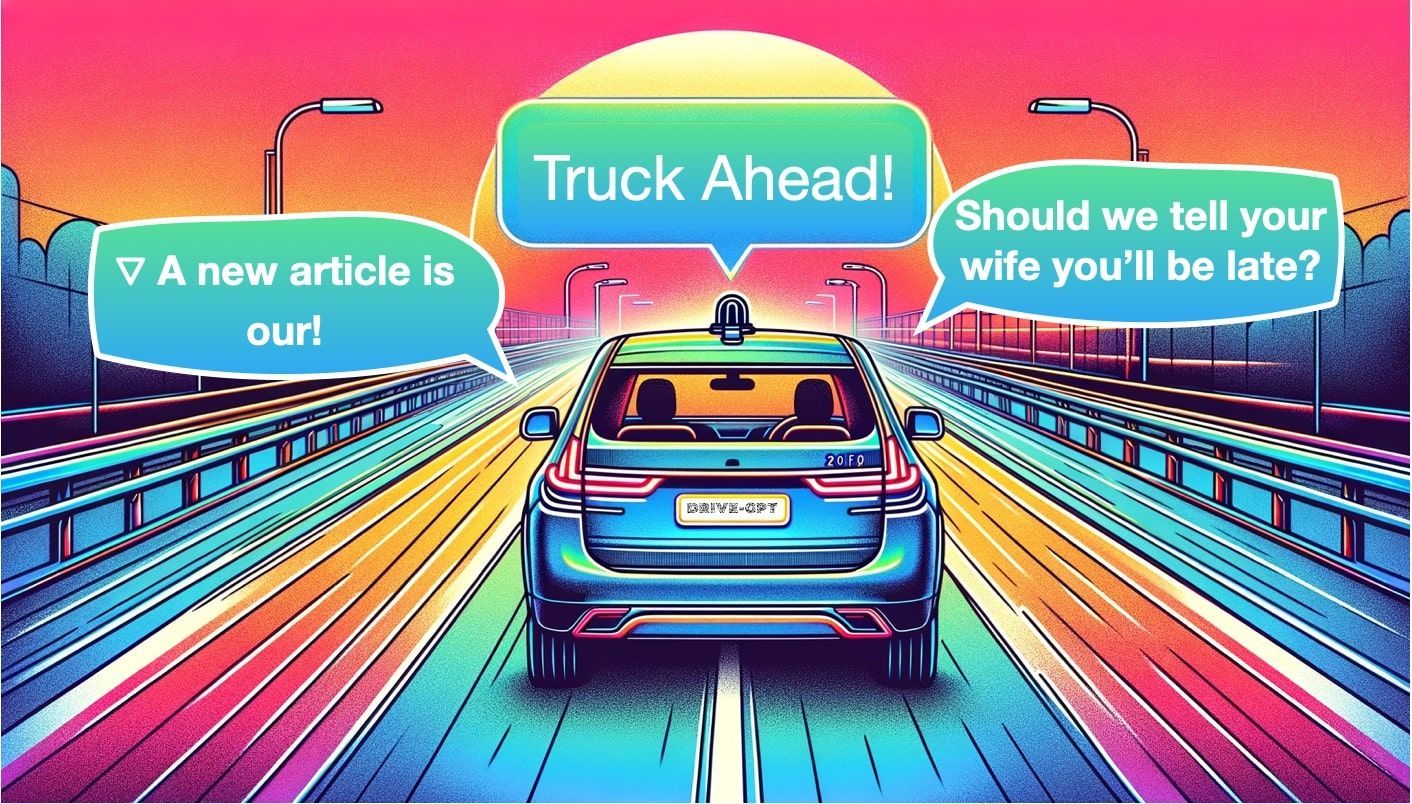

Car‑GPT: Can Large Language Models Unlock Practical Self‑Driving Cars?

Source: https://thegradient.pub/car-gpt

TL;DR

- Large Language Models are being explored to augment autonomous driving across perception, planning, and scenario generation, moving beyond traditional modular pipelines.

- Representative efforts span perception (object detection and BEV maps), planning (LLMs suggesting driving decisions), and generation (video-like scene generation from prompts). Notable examples include Talk2BEV, DriveGPT, GAIA‑1, MagicDrive, Driving Diffusion, and related work.

- Trust and reliability are central concerns: potential hallucinations, black‑box behavior, and the need for on‑road validation. The field is at an early stage, with the first wave of papers appearing around mid‑2023.

- The potential includes new data loops and in‑car question‑answering capabilities (a Grok‑style Q&A assistant in vehicles), but practical deployment requires rigorous testing and safeguards. The Gradient

Context and background

Self‑driving technology has historically followed a modular approach: perception, localization, planning, and control operate as separate components. This structure enabled focused development but also created integration gaps and made debugging across modules harder. A contrasting line of research has pushed toward End‑To‑End learning, where a single neural network aims to replace multiple modules. End‑To‑End methods already exist, but they remain imperfect and raise concerns about determinism and interpretability.

The article uses a historical analogy to Penicillin, suggesting that a breakthrough might arise from an unexpected application of a powerful tool. For autonomous driving, Large Language Models (LLMs) are being studied as a potential catalyst for perception, planning, and scenario generation. The article explains LLMs in terms of tokens, tokenization, and Transformer blocks, emphasizing core ideas such as next‑word prediction, encoder/decoder architectures, and the role of attention. The Gradient

In this landscape, the most active research in 2023 has been in three domains: perception (turning image inputs into structured outputs such as objects and lanes), planning (defining driving trajectories), and generation (producing outputs such as future scenarios). Notable methods go beyond simple prompts, combining perception outputs with language‑based prompting to refine attention, crop regions, or select targets for further analysis. The article highlights several lines of work that illustrate how language models could be wired to perception and decision making, including Bird’s Eye View (BEV) representations and multi‑view inputs, along with models that assign persistent IDs to tracked objects using matching algorithms such as the Hungarian method. The Gradient
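The attention and next‑word‑prediction ideas the article leans on can be made concrete with a small sketch. The Python below is a minimal, dependency‑free version of scaled dot‑product attention, softmax(QKᵀ/√d)·V, with an optional causal mask of the kind decoder‑only (GPT‑style) models use so that each token attends only to itself and earlier tokens. It is an illustration under simplifying assumptions: a single head, toy two‑dimensional vectors, and the embeddings reused directly as queries, keys, and values instead of passing through learned projection matrices.

```python
import math
from typing import List

Matrix = List[List[float]]

def softmax(xs: List[float]) -> List[float]:
    # Subtract the max for numerical stability; masked (-inf) scores become weight 0.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(q: Matrix, k: Matrix, v: Matrix, causal: bool = False) -> Matrix:
    """Single-head scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(q[0])
    out = []
    for i, qi in enumerate(q):
        scores = []
        for j, kj in enumerate(k):
            if causal and j > i:
                # Decoder-only models hide future tokens from position i.
                scores.append(float("-inf"))
            else:
                scores.append(sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d))
        weights = softmax(scores)
        # Each output row is a weighted average of the value vectors.
        out.append([sum(w * vj[c] for w, vj in zip(weights, v)) for c in range(len(v[0]))])
    return out

# Three toy token embeddings used directly as Q, K and V (no learned projections).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
y = attention(x, x, x, causal=True)
```

With `causal=True`, the first token can only see itself, so its output is exactly its own value vector; later tokens blend information from every earlier position, which is what lets a decoder predict the next word from the words so far.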

What’s new

The piece presents three prominent families of LLM usage in self‑driving:

- Perception: LLMs aid object detection, lane detection, and 3D localization by combining outputs from conventional perception pipelines with language‑assisted refinement. Pioneering demonstrations show how prompts can guide the system to focus on specific regions or objects and how IDs can be assigned to tracked entities using matching techniques. Examples discussed include HiLM‑D, MTD‑GPT, and PromptTrack, which illustrate capabilities such as identifying specific objects and maintaining consistent IDs across frames. The Gradient

- Planning: LLMs contribute to driving decisions by reasoning over perception outputs. Talk2BEV is highlighted as a notable approach that uses Bird’s Eye View inputs and language‑driven guidance to propose driving actions, crop regions for closer analysis, and predict trajectories. The idea is to leverage the strengths of language models for interpretation and instruction following while keeping a grounded perception backbone. DriveGPT is another example that connects perception outputs to a language model to generate driving trajectories. The Gradient

- Generation: Some models aim to generate scenes or future scenarios to expand data and simulate diverse conditions. Wayve’s GAIA‑1 model can take text and images as input and produce videos by employing a World Model that encodes world dynamics and interactions. MagicDrive uses perception outputs to synthesize driving scenes, while Driving Into the Future and Driving Diffusion explicitly generate future scenarios from current states. The overall message is that language‑assisted generation can create rich data and test cases, enabling broader coverage for training and evaluation. The Gradient

In sum, the article identifies three active families of LLM usage in autonomous driving (Perception, Planning, and Generation) and uses concrete examples to illustrate how language models could augment or reinterpret traditional driving stacks. It also acknowledges that many of these ideas are exploratory and not yet deployed in live driving. The Gradient
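The planning pattern in which perception outputs are handed to a language model (as in the Talk2BEV and DriveGPT examples) can be sketched as two small steps: rasterize detected objects into a Bird’s Eye View occupancy grid, and serialize the same objects into a text description that could be placed in a prompt. This is a hypothetical illustration, not code from Talk2BEV, DriveGPT, or any cited paper; the `Detection` class, the 8×8 grid, the 5‑meter cells, and the prompt wording are all assumptions.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Detection:
    """Hypothetical perception output in the ego frame: x meters forward, y meters left."""
    track_id: int
    label: str
    x: float
    y: float

def to_bev_grid(dets: List[Detection], size: int = 8, cell_m: float = 5.0) -> List[List[int]]:
    """Rasterize detections into a size x size occupancy grid centered on the ego vehicle.
    Row 0 is farthest ahead; column 0 is farthest to the left."""
    grid = [[0] * size for _ in range(size)]
    half = size // 2
    for d in dets:
        row = half - 1 - int(d.x // cell_m)
        col = half - 1 - int(d.y // cell_m)
        if 0 <= row < size and 0 <= col < size:   # ignore objects outside the grid
            grid[row][col] = 1
    return grid

def describe_scene(dets: List[Detection]) -> str:
    """Serialize detections, nearest first, into plain text a language model could read."""
    lines = []
    for d in sorted(dets, key=lambda o: o.x ** 2 + o.y ** 2):
        dist = (d.x ** 2 + d.y ** 2) ** 0.5
        where = "ahead" if d.x >= 0 else "behind"
        side = "left" if d.y > 0 else ("right" if d.y < 0 else "center")
        lines.append(f"Object {d.track_id} ({d.label}): {dist:.1f} m {where}, {side}")
    return "\n".join(lines)

def build_prompt(dets: List[Detection], question: str) -> str:
    """Hypothetical prompt template; the wording is an assumption, not from any paper."""
    return ("Scene objects from the perception stack:\n" + describe_scene(dets)
            + f"\nQuestion: {question}\nAnswer with a single driving action.")

dets = [Detection(1, "car", 12.0, 0.5), Detection(2, "pedestrian", 6.0, -3.0)]
grid = to_bev_grid(dets)
prompt = build_prompt(dets, "Is it safe to keep going straight?")
```

The grid and the text carry the same information: the grid suits a spatial (BEV) encoder, while the text is what a language model would consume. Published systems use much richer BEV features than a binary occupancy grid.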

Why it matters (impact for developers/enterprises)

- Transparency versus black‑box risk: Traditional deep learning systems in driving already face scrutiny for their opacity. LLMs can add a layer of interpretability through language‑driven prompts and reasoning, but they also raise new questions about reliability and debuggability. The author notes that LLMs are becoming more transparent over time, yet live, on‑road deployment remains unproven and requires careful validation. The Gradient

- Data generative potential: By producing or augmenting driving scenarios, captions, and reasoning traces, LLM‑augmented systems could expand the data ecosystem for training perception, planning, and evaluation tools. This can help build more robust QA loops and scenario libraries, potentially improving generalization across edge cases.

- Hybrid approaches as a bridge: Rather than replacing the entire stack, LLMs are framed as components that enrich perception outputs, propose driving decisions, or generate future scenes, while the underlying perception and planning modules remain grounded in deterministic methods. This hybrid stance may be more acceptable to regulators and practitioners seeking safety assurances. The Gradient

- Road to trust and safety: The article emphasizes the early stage of live deployments and the importance of addressing hallucinations and determinism. For developers and enterprises, this implies layered testing, risk assessment, and conservative deployment strategies before any “in‑car” use cases mature. The Gradient
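The hybrid, safety‑first stance above implies that a language model's suggestion should not reach the actuators unchecked. One way to sketch this, assuming a hypothetical planner that asks an LLM for a single high‑level action as text, is a deterministic gate: accept only actions from a fixed whitelist, replace anything the model makes up (a simple guard against hallucinated outputs), and override the model when a rule‑based time‑to‑collision check fails. The action names, the 3‑second threshold, and the `gate_action` function are illustrative assumptions, not taken from the article.

```python
from typing import Optional

# Illustrative values only; a real stack would derive these from its safety case.
ALLOWED_ACTIONS = {"keep_lane", "slow_down", "stop", "change_lane_left", "change_lane_right"}
SAFE_WHEN_CLOSE = {"slow_down", "stop"}
FALLBACK_ACTION = "slow_down"
MIN_TTC_S = 3.0   # minimum acceptable time-to-collision, in seconds

def time_to_collision(gap_m: float, closing_speed_mps: float) -> Optional[float]:
    """Seconds until contact with the lead object, or None if the gap is not shrinking."""
    if closing_speed_mps <= 0:
        return None
    return gap_m / closing_speed_mps

def gate_action(llm_text: str, gap_m: float, closing_speed_mps: float) -> str:
    """Deterministically vet an LLM-proposed action before it reaches the planner."""
    proposal = llm_text.strip().lower()
    # 1) Anything outside the whitelist (e.g. a hallucinated maneuver) is replaced.
    if proposal not in ALLOWED_ACTIONS:
        return FALLBACK_ACTION
    # 2) A rule-based check overrides the model when a collision is imminent.
    ttc = time_to_collision(gap_m, closing_speed_mps)
    if ttc is not None and ttc < MIN_TTC_S and proposal not in SAFE_WHEN_CLOSE:
        return "stop"
    return proposal
```

The design choice is that the language model only proposes and deterministic code decides, which keeps the final behavior auditable and testable even when the model's output is wrong.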

Technical details or Implementation

- A quick primer on LLMs: The article reviews how tokenization converts text into numbers for neural networks and how Transformer blocks built on multi‑head attention compose the model, whether encoder‑decoder, encoder‑only, or decoder‑only (as in GPT‑style models); cross‑attention, which lets the decoder attend to the encoder’s outputs, appears only in the encoder‑decoder variant. The core task remains next‑word prediction, applied here to driving‑relevant outputs such as scene descriptions or plans. The Gradient

- Perception, planning, and BEV integration: In perception, an encoder–decoder or related architecture detects objects and lanes and localizes them in 3D. BEV (Bird’s Eye View) representations simplify spatial reasoning and can be paired with language prompts to refine detections and focus attention. Planning then applies LLM reasoning to these outputs to propose trajectories or adjustments, sometimes using language to direct attention to specific regions. The idea is to blend traditional perception with language‑guided refinement to produce better‑informed decisions. The Gradient

- Data and identity tracking: Several works discuss maintaining consistent IDs for objects across frames, employing methods like bipartite graph matching (e.g., the Hungarian Algorithm) to associate detections over time. This helps create coherent scene understandings that a language model can reason about. The Gradient

- Advanced generation and world modeling: For generation, models like GAIA‑1 ingest text and images and output videos by leveraging a World Model that represents the world dynamics and agents’ interactions. This illustrates how language‑conditioned video generation can produce plausible driving scenarios, enriching data generation and evaluation pipelines. Other examples (MagicDrive, Driving Into the Future, Driving Diffusion) demonstrate scenario synthesis driven by perception outputs. The Gradient

- Current limitations and trust considerations: The author cautions that adopting LLMs in live driving faces trust barriers due to potential hallucinations and the ongoing need to keep systems transparent and auditable. A fully deterministic, verifiable stack remains a prerequisite for widespread adoption, even as the field experiments with “language‑enhanced” perception and planning. The Gradient
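The ID‑tracking step described above (associating this frame's detections with last frame's tracks) is a linear assignment problem, which the Hungarian algorithm solves optimally by minimizing the total matching cost. Below is a minimal, dependency‑free sketch assuming 2D BEV positions and Euclidean distance as the cost; the `max_dist` gate, the ID counter, and the function names are illustrative assumptions rather than the method of any specific paper cited in the article. In practice, a library routine such as SciPy's `linear_sum_assignment` would usually replace the hand‑written solver.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]

def hungarian(cost: List[List[float]]) -> List[int]:
    """Min-cost assignment for an n x m matrix with n <= m (O(n^2 m) potentials method).
    Returns, for each row, the index of its assigned column."""
    n, m = len(cost), len(cost[0])
    INF = float("inf")
    u, v = [0.0] * (n + 1), [0.0] * (m + 1)
    p, way = [0] * (m + 1), [0] * (m + 1)   # p[j]: row matched to column j (1-based)
    for i in range(1, n + 1):
        p[0], j0 = i, 0
        minv, used = [INF] * (m + 1), [False] * (m + 1)
        while True:   # grow an alternating tree until a free column is reached
            used[j0] = True
            i0, delta, j1 = p[j0], INF, 0
            for j in range(1, m + 1):
                if not used[j]:
                    cur = cost[i0 - 1][j - 1] - u[i0] - v[j]
                    if cur < minv[j]:
                        minv[j], way[j] = cur, j0
                    if minv[j] < delta:
                        delta, j1 = minv[j], j
            for j in range(m + 1):   # update the dual potentials
                if used[j]:
                    u[p[j]] += delta
                    v[j] -= delta
                else:
                    minv[j] -= delta
            j0 = j1
            if p[j0] == 0:
                break
        while j0:   # augment along the alternating path
            j1 = way[j0]
            p[j0] = p[j1]
            j0 = j1
    rows = [-1] * n
    for j in range(1, m + 1):
        if p[j]:
            rows[p[j] - 1] = j - 1
    return rows

def associate(tracks: Dict[int, Point], detections: List[Point],
              next_id: int, max_dist: float = 3.0) -> Tuple[Dict[int, Point], int]:
    """Give each detection a persistent ID: matches inherit a track ID, others get new IDs.
    Tracks with no match are simply dropped here; real trackers often keep them a few frames."""
    ids = list(tracks)
    assigned: Dict[int, Point] = {}
    matched = set()
    if ids and detections:
        cost = [[math.dist(tracks[t], d) for d in detections] for t in ids]
        if len(ids) <= len(detections):
            pairs = list(enumerate(hungarian(cost)))
        else:   # more tracks than detections: solve the transposed problem
            transposed = [list(col) for col in zip(*cost)]
            pairs = [(r, c) for c, r in enumerate(hungarian(transposed))]
        for r, c in pairs:
            if cost[r][c] <= max_dist:   # gate out implausible jumps
                assigned[ids[r]] = detections[c]
                matched.add(c)
    for c, d in enumerate(detections):
        if c not in matched:   # an unmatched detection starts a new track
            assigned[next_id] = d
            next_id += 1
    return assigned, next_id

tracks = {1: (10.0, 0.0), 2: (5.0, 3.0)}
detections = [(5.5, 3.2), (10.4, -0.1), (20.0, 0.0)]
tracks, next_id = associate(tracks, detections, next_id=3)
```

Here track 1 keeps its ID on the detection near (10.4, ‑0.1), track 2 follows the detection near (5.5, 3.2), and the far‑away object becomes track 3. Unlike a greedy nearest‑neighbor pass, the optimal assignment cannot be thrown off by the order in which tracks are processed.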

Key takeaways

- LLMs are being explored as augmenters to perception, planning, and scenario generation in autonomous driving, rather than as drop‑in replacements for all modules.

- BEV representations and multi‑view inputs play a central role in enabling language‑assisted perception and reasoning.

- Generation capabilities open opportunities for data expansion and evaluation through synthetic scenes and future states.

- Trust, safety, and determinism remain critical hurdles to live deployment; the field is still early, with mid‑2023 papers initiating much of this discourse.

- A hybrid approach that combines language‑driven insights with grounded perception and planning may offer a practical path forward before fully end‑to‑end systems mature. The Gradient

FAQ

- What are the main application areas for LLMs in self‑driving according to the article?

Perception, planning, and generation are highlighted as the three core application areas where LLMs can contribute to driving decisions, scene understanding, and scenario creation. [The Gradient](https://thegradient.pub/car-gpt/)

- Are LLMs ready for live deployment in cars?

The article argues that it is still early to tell; live, in‑car use is not yet established, and trust, safety, and determinism remain significant concerns requiring thorough validation. [The Gradient](https://thegradient.pub/car-gpt/)

- What are some concrete models or examples cited?

Examples include Talk2BEV for planning with BEV inputs, DriveGPT for connecting perception to driving trajectories, Wayve’s GAIA‑1 for text‑and‑image‑driven video outputs, and generation systems like MagicDrive and Driving Diffusion. [The Gradient](https://thegradient.pub/car-gpt/)

- How do researchers address object tracking in these systems?

The discussion includes maintaining unique IDs for tracked objects and using matching algorithms such as the Hungarian Algorithm to associate detections over time. [The Gradient](https://thegradient.pub/car-gpt/)

References

- The Gradient. Car‑GPT: Can Large Language Models Unlock Practical Self‑Driving Cars? https://thegradient.pub/car-gpt/
