Why Doesn’t My Model Work? Common ML Pitfalls, Real Examples, and Fixes

TL;DR

- Models can appear to perform well on test data yet fail in real-world deployment due to data-related issues, not just modeling flaws. This is often driven by misleading data, hidden variables, and spurious correlations.

- Data labeling biases, mislabels, and implicit subjectivity can distort model learning and evaluation, inflating perceptions of accuracy.

- Data leakage and look-ahead bias hijack model evaluation by leaking information from outside the intended training signal, leading to overoptimistic performance.

- Practical fixes include careful data handling, post-hoc explainability checks, and adopting structured evaluation practices such as the REFORMS checklist for ML-based science.

- If you want a concise guide to the kinds of pitfalls and how to prevent them, see the surrounding discussion and concrete examples in this article. For further context and examples, the author highlights Covid-era prediction work, datasets with hidden variables and mislabellings, and cases where models learned to rely on non-causal signals. See the linked discussion for detailed cases and mitigation strategies: The Gradient: Why Doesn’t My Model Work? Common ML Pitfalls, Real Examples, and Fixes.

Note: The article also references broader debates about reproducibility and trust in published ML results across scientific disciplines and highlights resources like the AIAAIC repository to illustrate real-world pitfalls.

Context and background

Machine learning processes are deeply data-dependent. When data quality is compromised, models can achieve high metrics on a test split without delivering real-world utility. Covid-19 prediction efforts showcased this risk: public datasets may contain misleading signals such as overlapping records, mislabellings, and hidden variables that support accurate class labels without learning anything causal about the outcome. This phenomenon—garbage in, garbage out—remains a central concern for researchers and practitioners alike. Hidden variables are features present in data that happen to predict class labels within a given dataset but are not causally related to the target task. In Covid chest imaging, for example, the orientation of the body (sick patients scanned lying down vs. standing healthy individuals) can become a predictor even if it is not medically relevant. Such signals can yield models that appear strong on historical data but fail to generalize to new cases. In practice, hidden variables are sometimes visible in the data as boundary markers, watermarks, or timestamps embedded in images that help separate classes without analyzing the actual signal of interest. Spurious correlations are patterns that align with labels in a dataset yet have no causal relationship to the prediction task. A well-known, illustrative case is a tank detection dataset where the model inadvertently learned to rely on background cues like weather or time-of-day instead of identifying the tanks themselves. Deep learning models can readily pick up such spuriously correlated patterns, which undermines generalization and makes them vulnerable to adversarial perturbations that flip predictions by altering background pixels. Saliency maps and explainability tools often reveal when a model attends to background regions rather than to meaningful features, signaling a generalization risk. Labeling quality also matters. When humans assign labels, biases, misassumptions, or simple mistakes can corrupt the dataset, producing mislabels that masquerade as ground truth. Benchmark datasets such as MNIST and CIFAR have demonstrated non-trivial mislabelling rates, which can inflate reported accuracy and obscure whether improvements reflect genuine model advances or labeling noise. Subjectivity in labeling is particularly acute in sentiment analysis and other areas where human judgments vary. Beyond data quality, the pipeline itself can introduce errors. Data leakage occurs when information the model should not see during training is inadvertently used in the training process. This is especially common when preprocessing steps (centering, scaling, feature selection, dimensionality reduction, augmentation) are performed on the entire dataset before splitting into train and test sets, thereby leaking information about the test distribution into the model. In time-series forecasting, look-ahead bias—where future information leaks into training samples—can create artificially optimistic test performance. The author emphasizes that many pitfalls are not about algorithmic complexity but about data handling and evaluation design. The recent emphasis on ML-driven science includes recommending structured checks, such as the REFORMS checklist, to decouple data quality and evaluation from model-centric narratives. For readers seeking deeper exploration of these issues, the article points to reviews and case studies that document how such pitfalls have manifested in real-world models and how researchers attempted remediation.

What’s new

The article foregrounds several practical pitfalls that routinely trip up ML deployments and provides concrete illustrations of how these can arise in real datasets. It highlights how models can look impressive on conventional test sets yet perform poorly in the wild due to signals that are not causally connected to the target task. In response, the piece advocates adopting explicit checks and safeguards, including:

- Using explainability tools to verify that the model relies on meaningful input features rather than background cues or metadata.

- Detecting hidden variables by examining whether seemingly predictive cues (like image orientation or watermarks) are driving decisions.

- Identifying and mitigating spurious correlations present in common benchmarks and how such correlations can reduce generalization.

- Recognizing data labeling biases and quantifying their potential impact on evaluation metrics.

- Preventing data leakage by ensuring train/test separation is respected across preprocessing steps and by avoiding look-ahead information in time-series tasks.

- Being mindful of augmentation practices that might inadvertently leak information from the test set into the training set.

- Applying the REFORMS checklist as a structured approach to ML-based scientific workflows and reporting. The piece also frames these issues within the broader context of reproducibility and trust in published ML results, noting that many missteps have been observed in both scientific and popular discourse. See the linked discussion for detailed case studies and mitigations.

Why it matters (impact for developers/enterprises)

For developers and enterprises, these pitfalls translate into real-world risk: models that appear robust in controlled testing can fail when confronted with real-world data distributions, different data collection processes, or new environments. The consequences include suboptimal performance, costly redeployments, and erosion of trust in ML-derived insights. Data-centric failures—misleading data, hidden variables, and spurious correlations—undermine generalization, while data leakage and look-ahead bias inflate metrics and mask underlying deficiencies in evaluation pipelines. Practically, organizations should adopt disciplined data handling and evaluation practices. Recognizing that many problems originate in data quality and process design rather than in the core modeling approach is crucial. The REFORMS checklist offers a structured way to frame ML-based scientific workflows, encouraging explicit scrutiny of data provenance, labeling, dataset splits, preprocessing, and validation strategies. By foregrounding data-centric considerations, teams can reduce the likelihood of deploying models that perform well in theory but fail in production.

Technical details or Implementation

This section summarizes the key pitfalls and concrete mitigations discussed in the article, with an emphasis on actionable checks you can apply in practice.

Misleading data and data quality issues

- Data quality directly underpins model utility. In high-stakes contexts like health or public safety, misleading signals in data (overlapping records, mislabels, hidden variables) can drive models to achieve high test scores while offering little real-world value.

- Practical mitigations include auditing datasets for overlaps and mislabeled entries, and validating that predictive signals align with domain understanding rather than incidental correlations.

Hidden variables

- Features present in data that correlate with labels but are not causally related to the task can mislead models during training.

- In imaging data, the orientation of a patient or other non-disease-related cues can become predictive proxies.

- Mitigations include dataset design that minimizes such cues, and using explainability techniques to verify that decisions rely on medically relevant features rather than incidental indicators.

Spurious correlations

- Deep learners can capture correlational patterns that do not generalize beyond the training distribution, especially when background contexts are spuriously aligned with labels.

- Example-style scenarios show models leveraging color or layout cues rather than object identity.

- Mitigations include scrutiny of model attention with saliency maps, adversarial training to expose reliance on fragile cues, and ensuring that evaluation datasets are representative of real-world variability.

Labeling biases and mislabelling

- Human labeling can introduce biases or mistakes that distort evaluation. Benchmark datasets like MNIST and CIFAR have documented mislabelling rates that can affect perceived gains.

- Mitigations include cross-checking labels, quantifying labeling uncertainty, and accounting for potential biases when interpreting accuracy gains.

Data leakage and look-ahead bias

- Data leakage occurs when the training pipeline accesses information that should not be available during training, often through preprocessing that uses the entire dataset before a train/test split.

- Examples include centering/scaling performed on the full dataset, feature selection conducted prior to split, and inappropriate data augmentation that contaminates the test set.

- Look-ahead bias is a specific form of leakage common in time-series forecasting, where future information leaks into training samples, inflating test performance.

- Mitigations include performing all data-dependent preprocessing strictly after the training/test split, using time-aware cross-validation for temporal data, and avoiding augmentation practices that introduce test data into training.

Implementation checklist and the REFORMS approach

- The article endorses the REFORMS checklist as a structured approach to ML-based science, promoting explicit consideration of data provenance, feature handling, and evaluation integrity.

- Practitioners should implement data audits, separate preprocessing pipelines for train/validation/test, and transparent reporting of data-related limitations.

Table: Pitfalls, impacts, and mitigations (illustrative guide)

| Pitfall | Example/Impact | Mitigation |---|---|---| | Misleading data and hidden variables | Models appear strong on test data but rely on non-causal cues | Audit datasets for overlapping records and hidden variables; use explainability tools to verify feature usage |Data leakage and look-ahead bias | Overoptimistic test performance in time-series or preprocessed data | Ensure preprocessing is performed after train/test split; use proper time-based validation; avoid leakage through augmentation |Spurious correlations | Background cues drive predictions (e.g., color cues in images) | Examine saliency maps; employ adversarial training and robust evaluation across diverse contexts |

Key takeaways

- High test accuracy does not guarantee real-world utility; data issues frequently masquerade as model flaws.

- Hidden variables, mislabellings, and spurious correlations are common sources of poor generalization in ML.

- Data leakage and look-ahead bias inflate performance metrics and must be prevented through careful data handling and evaluation design.

- Explainability checks (e.g., saliency maps) can reveal reliance on non-causal cues and guide corrective action.

- Structured practices like the REFORMS checklist provide a practical framework to improve ML-based scientific workflows and reporting.

FAQ

-

What is data leakage?

It occurs when the model training pipeline has access to information it shouldn’t during training, such as performing data-dependent preprocessing on the entire dataset before splitting into train and test sets, leading to overestimated performance.

-

What are hidden variables?

Features present in data that are predictive of labels in the data but not genuinely related to the target task; models can latch onto them and fail to generalize.

-

How can I detect spurious correlations in my model?

Look for reliance on background cues or patterns that do not align with the actual task; use explainability methods like saliency maps and test on varied contexts to assess generalization.

-

What is look-ahead bias in time-series tasks?

A form of data leakage where future information leaks into the training data, artificially improving test performance; mitigate with proper time-based splits and validation.

-

How does REFORMS help in ML-based science?

It provides a structured checklist to scrutinize data provenance, preprocessing, evaluation, and reporting, reducing common pitfalls in ML research.

References

More news

Microsoft to turn Foxconn site into Fairwater AI data center, touted as world's most powerful

Microsoft unveils plans for a 1.2 million-square-foot Fairwater AI data center in Wisconsin, housing hundreds of thousands of Nvidia GB200 GPUs. The project promises unprecedented AI training power with a closed-loop cooling system and a cost of $3.3 billion.

Reddit Pushes for Bigger AI Deal with Google: Users and Content in Exchange

Reddit seeks a larger licensing deal with Google, aiming to drive more users and access to Reddit data for AI training, potentially via dynamic pricing and traffic incentives.

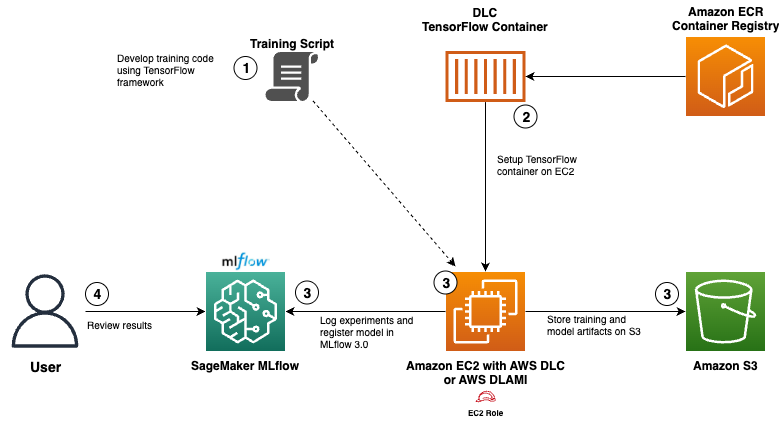

Use AWS Deep Learning Containers with Amazon SageMaker AI managed MLflow

Explore how AWS Deep Learning Containers (DLCs) integrate with SageMaker AI managed MLflow to balance infrastructure control and robust ML governance. A TensorFlow abalone age prediction workflow demonstrates end-to-end tracking, model governance, and deployment traceability.

GPT-5-Codex Addendum: Agentic Coding Optimized GPT-5 with Safety Measures

An addendum detailing GPT-5-Codex, a GPT-5 variant optimized for agentic coding within Codex, with safety mitigations and multi-platform availability.

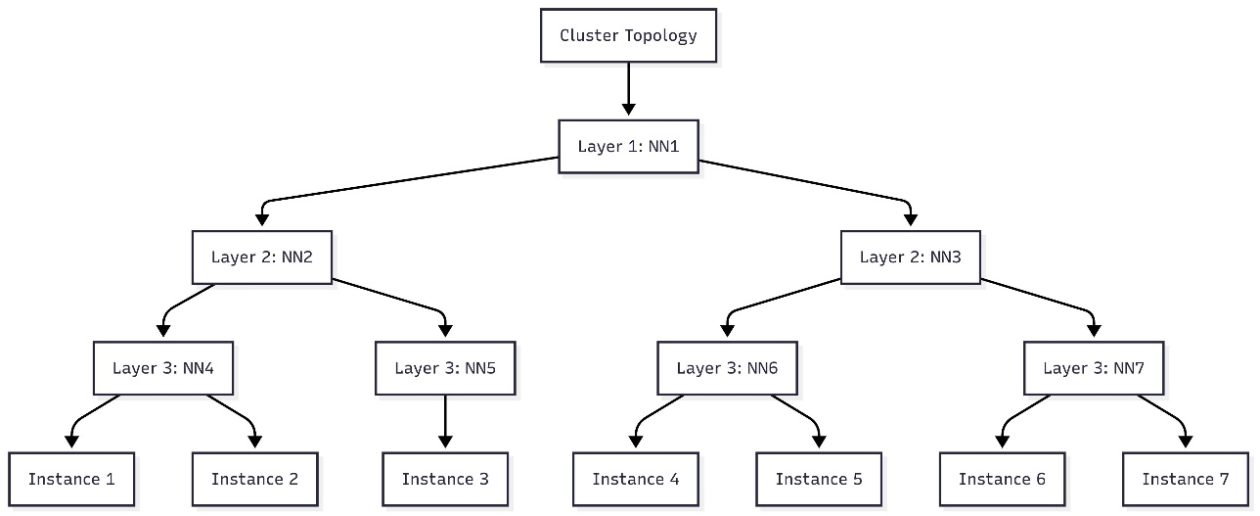

Schedule topology-aware workloads using Amazon SageMaker HyperPod task governance

AWS introduces topology-aware scheduling with SageMaker HyperPod task governance to optimize training efficiency and network latency on EKS clusters, using EC2 topology data to guide job placement.

How Quantization Aware Training Enables Low-Precision Accuracy Recovery

Explores quantization aware training (QAT) and distillation (QAD) as methods to recover accuracy in low-precision models, leveraging NVIDIA's TensorRT Model Optimizer and FP8/NVFP4/MXFP4 formats.